Microsoft exposes Storm-2139, a cybercrime network exploiting Azure AI via LLMjacking. Learn how stolen API keys enabled harmful content generation and what legal action Microsoft took.

Microsoft has taken legal action against a cybercriminal network, known as Storm-2139, responsible for exploiting vulnerabilities within its Azure AI services. The company publicly identified and condemned four individuals central to this illicit operation. Their names are as follows:

- Arian Yadegarnia aka “Fiz” of Iran

- Phát Phùng Tấn aka “Asakuri” of Vietnam

- Ricky Yuen aka “cg-dot” of Hong Kong, China

- Alan Krysiak aka “Drago” of the UK

According to Microsoft’s official report, shared exclusively with Hackread.com, these individuals used various online aliases to operate a scheme known as LLMjacking. LLMjacking is the hijacking of Large Language Models (LLMs) by stealing API (Application Programming Interface) keys, which act as digital credentials for accessing AI services. Once they obtain these keys, cybercriminals can manipulate the LLMs into generating harmful content.

Storm-2139’s core activity involved using stolen customer credentials, obtained from publicly available sources, to gain unauthorized access to AI platforms, alter the capabilities of those services, circumvent built-in safety measures, and then resell access to other malicious actors. The group provided detailed instructions on how to generate illicit content, including non-consensual intimate images and sexually explicit material, often targeting celebrities.

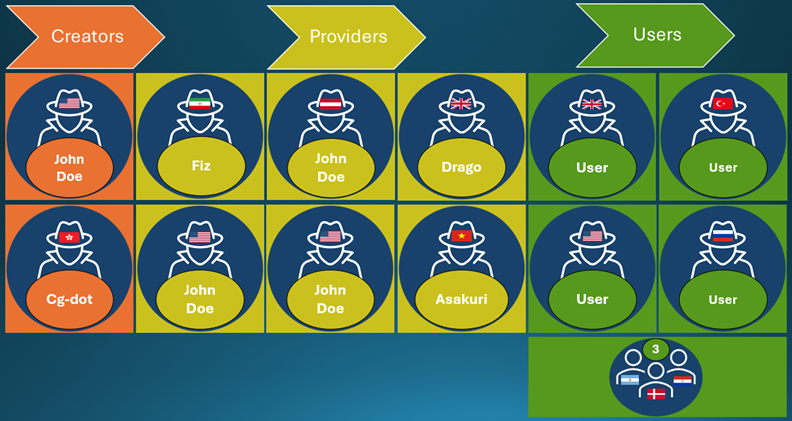

Microsoft’s Digital Crimes Unit (DCU) initiated legal proceedings in December 2024, initially targeting ten unidentified individuals. Through subsequent investigations, it identified the key members of Storm-2139. The network operated on a structured model: creators developed the malicious tools, providers modified and distributed them, and users generated the abusive content.

“Storm-2139 is organized into three main categories: creators, providers, and users. Creators developed the illicit tools that enabled the abuse of AI-generated services. Providers then modified and supplied these tools to end users often with varying tiers of service and payment. Finally, users then used these tools to generate violating synthetic content,” Microsoft’s blog post revealed.

The legal actions taken by Microsoft, including the seizure of a key website, significantly disrupted the network. Members of the group reacted with alarm. They engaged in online chatter, tried to identify other members, and even resorted to doxing Microsoft’s legal counsel. These reactions indicate how effectively Microsoft’s strategy was dismantling the criminal operation.

Microsoft pursued a multi-faceted legal strategy. It filed civil litigation to disrupt the network’s operations and made criminal referrals to law enforcement agencies. The approach aimed both to halt the immediate threat and to deter future AI misuse.

The company is also tackling the broader problem of AI being misused to generate harmful content by implementing stringent guardrails and developing new methods to protect users. In addition, it advocates modernizing criminal law so that law enforcement has the tools needed to combat AI misuse.

Security experts have highlighted the importance of stronger credential protection and continuous monitoring in preventing such attacks. Rom Carmel, Co-Founder and CEO at Apono, told Hackread that companies using AI and cloud tools for growth must limit access to sensitive data to reduce security risks.

“As organizations adopt AI tools to drive growth, they also expand their attack surface with applications holding sensitive data. To securely leverage AI and the cloud, access to sensitive systems should be restricted on a need-to-use basis, minimizing opportunities for malicious actors.”

Top/Featured Image via Pixabay/BrownMantis