Artificial Intelligence & Machine Learning, Next-Generation Technologies & Secure Development, The Future of AI & Cybersecurity

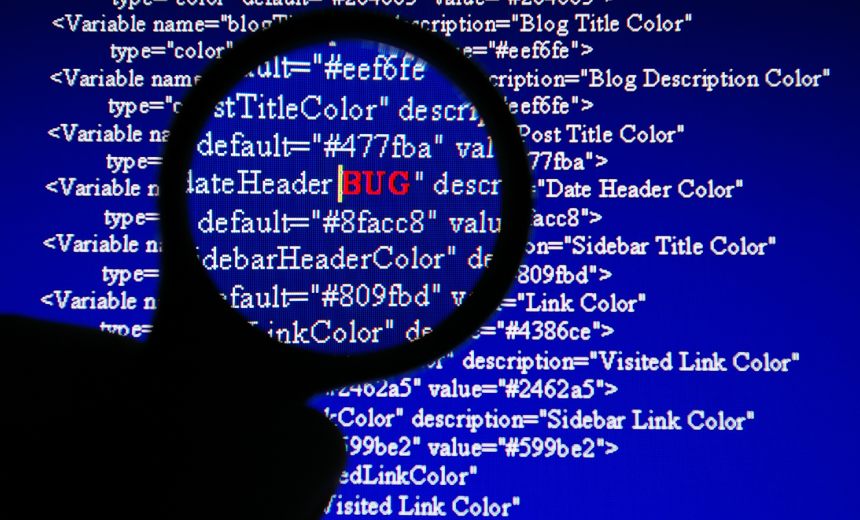

Vulnerability Researchers: Start Tracking LLM Capabilities, Says Veteran Bug Hunter

A vulnerability researcher said large language models have taken a big step forward in their ability to help chase down code flaws.


Veteran London-based bug hunter Sean Heelan said he’s been reviewing frontier artificial intelligence models to see if they have the chops to spot vulnerabilities, and found success in using OpenAI’s o3 model, released in April. It discovered CVE-2025-37899, a remotely exploitable zero-day vulnerability in the Linux kernel’s implementation of Server Message Block, a network communication protocol for sharing files, printers and other resources on a network.

“With o3, LLMs have made a leap forward in their ability to reason about code, and if you work in vulnerability research you should start paying close attention,” Heelan said in a blog post. “If you’re an expert-level vulnerability researcher or exploit developer, the machines aren’t about to replace you. In fact, it is quite the opposite: they are now at a stage where they can make you significantly more efficient and effective.”

As that statement suggests, a number of caveats apply. Heelan’s success at using o3 to find CVE-2025-37899 – “a use-after-free in the handler for the SMB ‘logoff’ command” – appears to trace back, in no small part, to his expertise as a bug hunter.

Notably, he’d already discovered the similar CVE-2025-37778 vulnerability in KSMBD, the SMB3 Kernel Server built into Linux, involving a dangling authentication pointer not being set to null.

Heelan set o3 loose on some of the KSMBD code to see how it “would perform were it the backend for a hypothetical vulnerability detection system,” as well as what code and instructions it would need to be shown to make that happen.

The LLM couldn’t be given access to the entire code base, due to “context window limitations and regressions in performance that occur as the amount of context increases.” He first needed to create a system to clearly describe what needed to be analyzed and how.

Properly cued, o3 found the vulnerability, using detailed reasoning to achieve this result. “Understanding the vulnerability requires reasoning about concurrent connections to the server, and how they may share various objects in specific circumstances,” Heelan said. The LLM “was able to comprehend this and spot a location where a particular object – that is not referenced counted – is freed while still being accessible by another thread. As far as I’m aware, this is the first public discussion of a vulnerability of that nature being found by a LLM.”

LLM Benchmarks Highlight Improvements

The vulnerability o3 discovered is a good benchmark for testing what the LLM can do. “While it is not trivial, it is also not insanely complicated,” Heelan said. The researcher said he could “walk a colleague through the entire code-path in 10 minutes,” with no additional Linux kernel, SMB protocol or other information required.

O3 generally additionally discovered the right answer to fixing the issue, though in different instances it offered an faulty answer. One quirk is that Heelan initially and independently got here up with the identical repair first proposed by o3, solely later realizing it would not work as a result of SMB protocol permitting “two completely different connections to ‘bind’ to the identical session,” and having no option to block an attacker from exploiting it, even with an answer that set it to null.

As that suggests, the vulnerability remediation process can’t yet be fully automated.

One challenge involves success rates. Heelan said he ran his test 100 times, each time using the same 12,000 lines of code – “combining the code for all of the handlers with the connection setup and teardown code, as well as the command handler dispatch routines” – which ended up being equivalent to about 100,000 input tokens, which was o3’s maximum. A token is a piece of a word as used in natural language processing; on average, one token works out to about four characters.
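The four-characters-per-token rule of thumb can be turned into a quick budget check. This is a rough sketch only: real tokenizers vary by model and by content, and the function names `estimate_tokens` and `fits_budget` are made up for illustration.

```python
from typing import Iterable

CHARS_PER_TOKEN = 4  # rough average cited above; actual tokenizers differ


def estimate_tokens(text: str, chars_per_token: int = CHARS_PER_TOKEN) -> int:
    """Approximate the token count as ceil(len(text) / chars_per_token)."""
    return -(-len(text) // chars_per_token)


def fits_budget(sources: Iterable[str], budget_tokens: int) -> bool:
    """Return True if the combined estimate stays within the token budget."""
    return sum(estimate_tokens(s) for s in sources) <= budget_tokens


# Back-of-envelope check on the figures reported above:
# 100,000 tokens at ~4 characters each, spread over 12,000 lines.
implied_chars = 100_000 * CHARS_PER_TOKEN
implied_chars_per_line = implied_chars / 12_000
print(f"~{implied_chars:,} characters, ~{implied_chars_per_line:.1f} per line")
```

Under that rule of thumb, 100,000 tokens corresponds to roughly 400,000 characters, or about 33 characters per line across 12,000 lines.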

The total cost of his roughly 100 test runs: $116.

Heelan said o3 found the vulnerability in the benchmark in eight of those 100 runs, concluded there was no flaw in 66 runs and for the remaining 23 runs generated false positives. This was an improvement on tests he ran using Anthropic’s Claude Sonnet 3.7, released in February, which found the flaw in three of its 100 runs, while Claude 3.5 didn’t find it at all.
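To put those hit rates in practical terms, the sketch below converts them into per-run detection rates and estimates how likely at least one detection is across repeated runs. It relies on two assumptions that the article does not state: that runs are independent, and that the $116 total splits evenly across exactly 100 runs. The function names are illustrative.

```python
RUNS = 100
TOTAL_COST_USD = 116.0  # assumed to be spread evenly over RUNS

# Runs (out of 100) in which each model found the benchmark flaw.
hits = {"o3": 8, "Claude Sonnet 3.7": 3, "Claude 3.5": 0}


def detection_rate(found: int, runs: int = RUNS) -> float:
    """Fraction of runs that found the flaw."""
    return found / runs


def p_at_least_one(rate: float, n: int) -> float:
    """Chance of one or more detections in n runs, assuming independence."""
    return 1 - (1 - rate) ** n


cost_per_run = TOTAL_COST_USD / RUNS

for model, found in hits.items():
    rate = detection_rate(found)
    print(f"{model}: {rate:.0%} per run, "
          f"{p_at_least_one(rate, 10):.0%} chance of a hit in 10 runs")

o3_rate = detection_rate(hits["o3"])
# Expected runs per detection is 1 / rate, so expected cost is cost / rate.
print(f"cost per run: ${cost_per_run:.2f}, "
      f"expected cost per o3 detection: ${cost_per_run / o3_rate:.2f}")
```

The point of the arithmetic is that a low per-run hit rate can still be useful when runs are cheap enough to repeat, provided someone can triage the false positives.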

As these results demonstrate, one main takeaway is that “o3 is not infallible,” and “there’s still a substantial chance it will generate nonsensical results and frustrate you,” he said.

What’s new is that “the chance of getting correct results is sufficiently high enough that it is worth your time and your effort to try to use it on real problems,” he said. “If you have a problem that can be represented in fewer than 10k lines of code there is a reasonable chance o3 can either solve it, or help you solve it.”

Making Professionals More Efficient

Heelan’s finding that AI tools might actually improve technology professionals’ ability to do their job isn’t an outlier.

Speaking last month at the RSAC Conference in San Francisco, Chris Wysopal, co-founder and chief security evangelist at Veracode, said developers on average ship 50% more code when they use AI-enhanced software development tools, with Google and Microsoft reporting a third of their new code is now AI-generated.

One wrinkle is that AI tools are trained on what real-world developers do, and so produce code containing as many vulnerabilities as classically built code. More code, more vulnerabilities.

Wysopal said the – clearly ironic – solution to this “is to use more AI.” Specifically, LLMs trained on secure code examples, so they recognize bad code and know how to fix it (see: Unpacking the Effect of AI on Secure Code Development).

Heelan’s research shows how the latest frontier AI models might be brought to bear to fix certain types of code, in part by experts creating well-built models designed to enable an LLM to assess a particular type of functionality, application or protocol.

“My prediction is that well-designed systems end up being just as – if not more important – than the increased intelligence of the models,” said AI and cybersecurity researcher Daniel Miessler in his latest Unsupervised Learning newsletter on Wednesday, responding to Heelan’s research.

“Think of it this way: the more context/memory and guided structure a given AI has to solve a problem, the less smart it needs to be,” Miessler said. “So when o3 or whatever finds its first zero-day, that’s cool, but it’s nothing compared to what it could do with 100 times the context and a super clear description of the life and work and process of a security researcher who does that for a living.”