The first Gemma mannequin launched early final yr and has since grown right into a thriving Gemmaverse of over 160 million collective downloads. This ecosystem contains our household of over a dozen specialised fashions for all the pieces from safeguarding to medical purposes and, most inspiringly, the numerous improvements from the neighborhood. From innovators like Roboflow constructing enterprise laptop imaginative and prescient to the Institute of Science Tokyo creating highly-capable Japanese Gemma variants, your work has proven us the trail ahead.

Constructing on this unbelievable momentum, we’re excited to announce the complete launch of Gemma 3n. Whereas final month’s preview provided a glimpse, at this time unlocks the complete energy of this mobile-first structure. Gemma 3n is designed for the developer neighborhood that helped form Gemma. It’s supported by your favourite instruments together with Hugging Face Transformers, llama.cpp, Google AI Edge, Ollama, MLX, and lots of others, enabling you to fine-tune and deploy on your particular on-device purposes with ease. This submit is the developer deep dive: we’ll discover among the improvements behind Gemma 3n, share new benchmark outcomes, and present you the best way to begin constructing at this time.

What’s new in Gemma 3n?

Gemma 3n represents a significant development for on-device AI, bringing highly effective multimodal capabilities to edge gadgets with efficiency beforehand solely seen in final yr’s cloud-based frontier fashions.

Reaching this leap in on-device efficiency required rethinking the mannequin from the bottom up. The muse is Gemma 3n’s distinctive mobile-first structure, and all of it begins with MatFormer.

MatFormer: One mannequin, many sizes

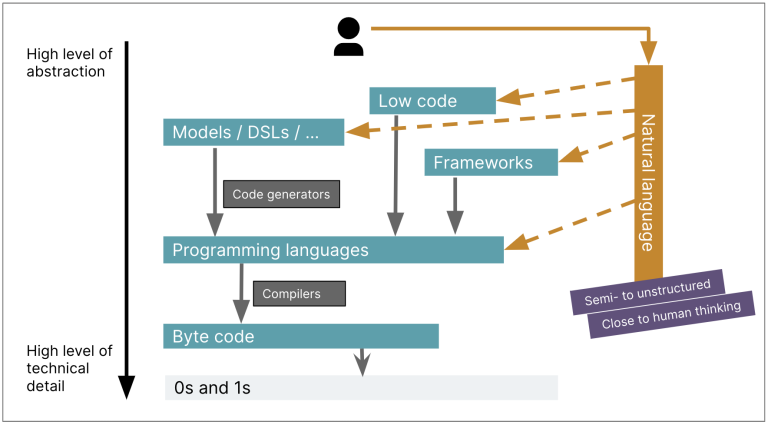

On the core of Gemma 3n is the MatFormer (🪆Matryoshka Transformer) structure, a novel nested transformer constructed for elastic inference. Consider it like Matryoshka dolls: a bigger mannequin comprises smaller, absolutely practical variations of itself. This method extends the idea of Matryoshka Illustration Studying from simply embeddings to all transformer parts.

Throughout the MatFormer coaching of the 4B efficient parameter (E4B) mannequin, a 2B efficient parameter (E2B) sub-model is concurrently optimized inside it, as proven within the determine above. This supplies builders two highly effective capabilities and use circumstances at this time:

1: Pre-extracted fashions: You’ll be able to instantly obtain and use both the principle E4B mannequin for the very best capabilities, or the standalone E2B sub-model which we have now already extracted for you, providing as much as 2x sooner inference.

2: Customized sizes with Combine-n-Match: For extra granular management tailor-made to particular {hardware} constraints, you’ll be able to create a spectrum of custom-sized fashions between E2B and E4B utilizing a technique we name Combine-n-Match. This method permits you to exactly slice the E4B mannequin’s parameters, primarily by adjusting the feed ahead community hidden dimension per layer (from 8192 to 16384) and selectively skipping some layers. We’re releasing the MatFormer Lab, a software that exhibits the best way to retrieve these optimum fashions, which had been recognized by evaluating varied settings on benchmarks like MMLU.

MMLU scores for the pre-trained Gemma 3n checkpoints at completely different mannequin sizes (utilizing Combine-n-Match)

Wanting forward, the MatFormer structure additionally paves the best way for elastic execution. Whereas not a part of at this time’s launched implementations, this functionality permits a single deployed E4B mannequin to dynamically swap between E4B and E2B inference paths on the fly, enabling real-time optimization of efficiency and reminiscence utilization based mostly on the present activity and gadget load.

Per-Layer Embeddings (PLE): Unlocking extra reminiscence effectivity

Gemma 3n fashions incorporate Per-Layer Embeddings (PLE). This innovation is tailor-made for on-device deployment because it dramatically improves mannequin high quality with out rising the high-speed reminiscence footprint required in your gadget’s accelerator (GPU/TPU).

Whereas the Gemma 3n E2B and E4B fashions have a complete parameter depend of 5B and 8B respectively, PLE permits a good portion of those parameters (the embeddings related to every layer) to be loaded and computed effectively on the CPU. This implies solely the core transformer weights (roughly 2B for E2B and 4B for E4B) want to sit down within the sometimes extra constrained accelerator reminiscence (VRAM).

With Per-Layer Embeddings, you should use Gemma 3n E2B whereas solely having ~2B parameters loaded in your accelerator.

KV Cache sharing: Sooner long-context processing

Processing lengthy inputs, such because the sequences derived from audio and video streams, is important for a lot of superior on-device multimodal purposes. Gemma 3n introduces KV Cache Sharing, a characteristic designed to considerably speed up time-to-first-token for streaming response purposes.

KV Cache Sharing optimizes how the mannequin handles the preliminary enter processing stage (typically known as the “prefill” part). The keys and values of the center layer from native and world consideration are instantly shared with all the highest layers, delivering a notable 2x enchancment on prefill efficiency in comparison with Gemma 3 4B. This implies the mannequin can ingest and perceive prolonged immediate sequences a lot sooner than earlier than.

Audio understanding: Introducing speech to textual content and translation

Gemma 3n makes use of a complicated audio encoder based mostly on the Common Speech Mannequin (USM). The encoder generates a token for each 160ms of audio (about 6 tokens per second), that are then built-in as enter to the language mannequin, offering a granular illustration of the sound context.

This built-in audio functionality unlocks key options for on-device improvement, together with:

- Automated Speech Recognition (ASR): Allow high-quality speech-to-text transcription instantly on the gadget.

- Automated Speech Translation (AST): Translate spoken language into textual content in one other language.

We have noticed significantly robust AST outcomes for translation between English and Spanish, French, Italian, and Portuguese, providing nice potential for builders focusing on purposes in these languages. For duties like speech translation, leveraging Chain-of-Thought prompting can considerably improve outcomes. Right here’s an instance:

consumer

Transcribe the next speech phase in Spanish, then translate it into English:

mannequin Plain textual content

At launch time, the Gemma 3n encoder is carried out to course of audio clips as much as 30 seconds. Nevertheless, this isn’t a basic limitation. The underlying audio encoder is a streaming encoder, able to processing arbitrarily lengthy audios with further lengthy type audio coaching. Comply with-up implementations will unlock low-latency, lengthy streaming purposes.

MobileNet-V5: New state-of-the-art imaginative and prescient encoder

Alongside its built-in audio capabilities, Gemma 3n includes a new, extremely environment friendly imaginative and prescient encoder, MobileNet-V5-300M, delivering state-of-the-art efficiency for multimodal duties on edge gadgets.

Designed for flexibility and energy on constrained {hardware}, MobileNet-V5 offers builders:

- A number of enter resolutions: Natively helps resolutions of 256×256, 512×512, and 768×768 pixels, permitting you to steadiness efficiency and element on your particular purposes.

- Broad visible understanding: Co-trained on intensive multimodal datasets, it excels at a variety of picture and video comprehension duties.

- Excessive throughput: Processes as much as 60 frames per second on a Google Pixel, enabling real-time, on-device video evaluation and interactive experiences.

This degree of efficiency is achieved with a number of architectural improvements, together with:

- A complicated basis of MobileNet-V4 blocks (together with Common Inverted Bottlenecks and Cellular MQA).

- A considerably scaled up structure, that includes a hybrid, deep pyramid mannequin that’s 10x bigger than the largest MobileNet-V4 variant.

- A novel Multi-Scale Fusion VLM adapter that enhances the standard of tokens for higher accuracy and effectivity.

Benefiting from novel architectural designs and superior distillation strategies, MobileNet-V5-300M considerably outperforms the baseline SoViT in Gemma 3 (skilled with SigLip, no distillation). On a Google Pixel Edge TPU, it delivers a 13x speedup with quantization (6.5x with out), requires 46% fewer parameters, and has a 4x smaller reminiscence footprint, all whereas offering considerably larger accuracy on vision-language duties

We’re excited to share extra in regards to the work behind this mannequin. Look out for our upcoming MobileNet-V5 technical report, which is able to deep dive into the mannequin structure, knowledge scaling methods, and superior distillation strategies.

Making Gemma 3n accessible from day one has been a precedence. We’re proud to companion with many unbelievable open supply builders to make sure broad help throughout fashionable instruments and platforms, together with contributions from groups behind AMD, Axolotl, Docker, Hugging Face, llama.cpp, LMStudio, MLX, NVIDIA, Ollama, RedHat, SGLang, Unsloth, and vLLM.

However this ecosystem is just the start. The true energy of this expertise is in what you’ll construct with it. That’s why we’re launching the Gemma 3n Affect Problem. Your mission: use Gemma 3n’s distinctive on-device, offline, and multimodal capabilities to construct a product for a greater world. With $150,000 in prizes, we’re on the lookout for a compelling video story and a “wow” issue demo that exhibits real-world affect. Be part of the problem and assist construct a greater future.

Get began with Gemma 3n at this time

Able to discover the potential of Gemma 3n at this time? Here is how:

- Experiment instantly: Use Google AI Studio to strive Gemma 3n in simply a few clicks. Gemma fashions will also be deployed on to Cloud Run from AI Studio.

- Be taught & combine: Dive into our complete documentation to rapidly combine Gemma into your initiatives or begin with our inference and fine-tuning guides.