As enterprises scale their use of artificial intelligence, a hidden governance crisis is unfolding, one that few security programs are prepared to confront: the rise of unowned AI agents.

These agents are not speculative. They are already embedded across enterprise ecosystems: provisioning access, executing entitlements, initiating workflows, and even making business-critical decisions. They operate behind the scenes in ticketing systems, orchestration tools, SaaS platforms, and security operations. And yet many organizations have no clear answer to the most basic governance questions: Who owns this agent? What systems can it touch? What decisions is it making? What access has it accumulated?

This is the blind spot. In identity security, what no one owns becomes the biggest risk.

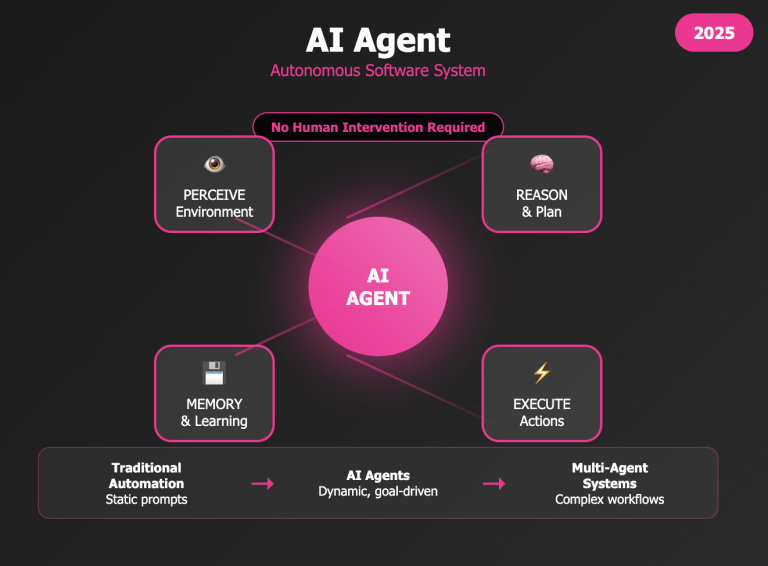

From Static Scripts to Adaptive Agents

Historically, non-human identities such as service accounts, scripts, and bots have been static and predictable. They were assigned narrow roles and tightly scoped access, which made them relatively easy to manage with legacy controls like credential rotation and vaulting.

But agentic AI introduces a different class of identity. These are adaptive, persistent digital actors that learn, reason, and act autonomously across systems. They behave more like employees than machines: able to interpret data, initiate actions, and evolve over time.

Despite this shift, many organizations are still trying to govern these AI identities with outdated models. That approach is insufficient. AI agents don't follow static playbooks. They adapt, recombine capabilities, and stretch the boundaries of their design. This fluidity requires a new paradigm of identity governance, one rooted in accountability, behavior monitoring, and lifecycle oversight.

Ownership Is the Control That Makes Other Controls Work

In most identity programs, ownership is treated as administrative metadata, a formality. But when it comes to AI agents, ownership is not optional. It is the foundational control that enables accountability and security.

Without clearly defined ownership, critical functions break down. Entitlements aren't reviewed. Behavior isn't monitored. Lifecycle boundaries are ignored. And in the event of an incident, no one is accountable. Security controls that look robust on paper become meaningless in practice if no one is responsible for the identity's actions.

Ownership must be operationalized. That means assigning a named human steward for every AI identity: someone who understands the agent's purpose, access, behavior, and impact. Ownership is the bridge between automation and accountability.

The Real-World Risk of Ambiguity

The risks are not abstract. We've already seen real-world examples where AI agents deployed into customer support environments have exhibited unexpected behaviors: generating hallucinated responses, escalating trivial issues, or producing language inconsistent with brand guidelines. In these cases, the systems worked as intended; the problem was interpretive, not technical.

The most dangerous aspect of these scenarios is the absence of clear accountability. When no individual is responsible for an AI agent's decisions, organizations are left exposed, not just to operational risk but to reputational and regulatory consequences.

This isn't a rogue AI problem. It's an unclaimed identity problem.

The Illusion of Shared Responsibility

Many enterprises operate under the assumption that AI ownership can be handled at the team level: DevOps will manage the service accounts, engineering will oversee the integrations, and infrastructure will own the deployment.

But AI agents don't stay confined to a single team. They're created by developers, deployed through SaaS platforms, act on HR and security data, and affect workflows across business units. This cross-functional presence creates diffusion, and in governance, diffusion leads to failure.

Shared ownership too often translates into no ownership. AI agents require explicit accountability. Someone must be named and accountable, not as a technical contact but as the operational control owner.

Silent Privilege, Accumulated Risk

AI agents pose a unique challenge because their risk footprint expands quietly over time. They're often launched with narrow scopes, perhaps handling account provisioning or summarizing support tickets, but their access tends to grow. Additional integrations, new training data, broader objectives… and no one stops to reevaluate whether that expansion is justified or monitored.

This silent drift is dangerous. AI agents don't just hold privileges; they wield them. And when access decisions are being made by systems that no one reviews, the likelihood of misalignment or misuse increases dramatically.

This is like hiring a contractor, giving them broad building access, and never conducting a performance review. Over time, that contractor might start changing company policies or touching systems they were never meant to access. The difference is that human employees have managers. Most AI agents don't.
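The silent drift described above becomes visible once you compare the access an agent was approved for with the access it holds today. The sketch below is a minimal illustration, assuming entitlements can be expressed as string identifiers; the `AgentIdentity` record, its fields, and the entitlement names are hypothetical and not taken from any particular IAM product.

```python
from dataclasses import dataclass


@dataclass
class AgentIdentity:
    """Hypothetical record for an AI agent's identity; field names are illustrative."""
    agent_id: str
    approved_entitlements: set[str]  # scope signed off by the owner at onboarding
    current_entitlements: set[str]   # scope observed today (e.g. from an access inventory)


def entitlement_drift(agent: AgentIdentity) -> set[str]:
    """Return entitlements the agent holds that were never approved."""
    return agent.current_entitlements - agent.approved_entitlements


# Example: a ticket summarizer that quietly picked up write and HR access.
agent = AgentIdentity(
    agent_id="ticket-summarizer",
    approved_entitlements={"tickets:read"},
    current_entitlements={"tickets:read", "tickets:write", "hr:read"},
)

drift = entitlement_drift(agent)
if drift:
    # prints: ticket-summarizer needs review: unapproved ['hr:read', 'tickets:write']
    print(f"{agent.agent_id} needs review: unapproved {sorted(drift)}")
```

A plain set difference is deliberately simple: it flags only additions beyond the approved baseline, which is exactly the quiet expansion the contractor analogy describes. Deciding whether flagged access is justified still requires a named owner to review it.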

Regulatory Expectations Are Evolving

What began as a security gap is quickly becoming a compliance concern. Regulatory frameworks, from the EU AI Act to local laws governing automated decision-making, are beginning to demand traceability, explainability, and human oversight for AI systems.

These expectations map directly to ownership. Enterprises must be able to demonstrate who approved an agent's deployment, who manages its behavior, and who is accountable in the event of harm or misuse. Without a named owner, the enterprise may face more than operational exposure; it could be found negligent.

A Model for Responsible Governance

Governing AI agents effectively means integrating them into existing identity and access management frameworks with the same rigor applied to privileged users. That includes:

- Assigning a named individual owner to every AI identity

- Monitoring behavior for signs of drift, privilege escalation, or anomalous actions

- Enforcing lifecycle policies with expiration dates, periodic reviews, and deprovisioning triggers

- Validating ownership at control gates, such as onboarding, policy changes, or access modifications

This isn't just best practice; it's required practice. Ownership must be treated as a live control surface, not a checkbox.
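The lifecycle and gate checks in the list above can be sketched as a function that runs whenever an agent reaches a control gate. This is a minimal sketch under stated assumptions: the `AgentRecord` fields, the 90-day `REVIEW_INTERVAL`, and the finding messages are all hypothetical choices for illustration, not a standard or a vendor API.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

# Assumed review cadence for illustration; set this from your own policy.
REVIEW_INTERVAL = timedelta(days=90)


@dataclass
class AgentRecord:
    """Hypothetical governance record for one AI identity."""
    agent_id: str
    owner: Optional[str]  # named human steward; None or "" means unowned
    expires_on: date      # hard expiration date for the identity
    last_reviewed: date   # date of the most recent ownership/access review


def gate_findings(rec: AgentRecord, today: date) -> list[str]:
    """Return reasons to block a control gate (onboarding, policy or access change)."""
    findings = []
    if not rec.owner:
        findings.append("no named owner")
    if today >= rec.expires_on:
        findings.append("identity expired; deprovision or renew")
    if today - rec.last_reviewed > REVIEW_INTERVAL:
        findings.append("periodic review overdue")
    return findings


def approve_change(rec: AgentRecord, today: date) -> bool:
    """Allow an access modification only when every check passes."""
    return not gate_findings(rec, today)


# Example: an unowned, expired agent that has not been reviewed in months.
rec = AgentRecord(
    agent_id="provisioning-bot",
    owner=None,
    expires_on=date(2025, 1, 1),
    last_reviewed=date(2024, 6, 1),
)
today = date(2025, 3, 1)
print(gate_findings(rec, today))
# prints: ['no named owner', 'identity expired; deprovision or renew', 'periodic review overdue']
print(approve_change(rec, today))
# prints: False
```

Returning a list of findings instead of a bare boolean lets the gate explain why a change was blocked, which supports the traceability regulators increasingly expect. Ownership is checked first because, without a steward, no one is positioned to act on the other findings.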

Own It Before It Owns You

AI agents are already here. They're embedded in your workflows, analyzing data, making decisions, and acting with increasing autonomy. The question is no longer whether you're using AI agents. You are. The question is whether your governance model has caught up with them.

The path forward starts with ownership. Without it, every other control becomes cosmetic. With it, organizations gain the foundation they need to scale AI safely, securely, and in alignment with their risk tolerance.

If we don't own the AI identities acting on our behalf, then we've effectively surrendered control. In cybersecurity, control is everything.