Introducing a context-based framework for comprehensively evaluating the social and ethical risks of AI systems

Generative AI systems are already being used to write books, create graphic designs, and assist medical practitioners, and they are becoming increasingly capable. Ensuring these systems are developed and deployed responsibly requires carefully evaluating the potential ethical and social risks they may pose.

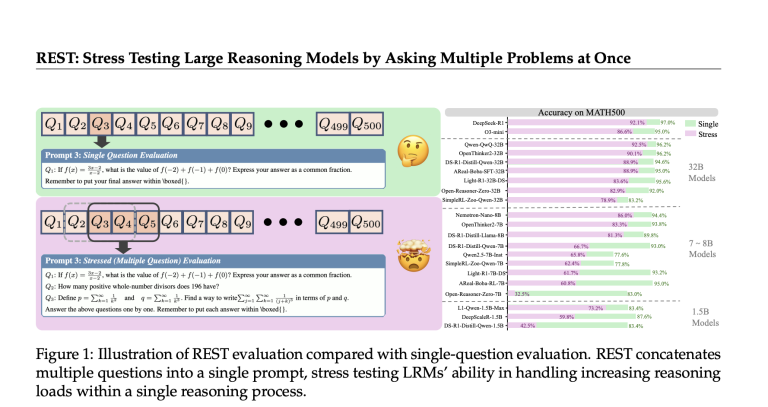

In our new paper, we propose a three-layered framework for evaluating the social and ethical risks of AI systems. This framework includes evaluations of AI system capability, human interaction, and systemic impacts.

We also map the current state of safety evaluations and find three main gaps: context, specific risks, and multimodality. To help close these gaps, we call for repurposing existing evaluation methods for generative AI and for implementing a comprehensive approach to evaluation, as in our case study on misinformation. This approach integrates findings such as how likely the AI system is to provide factually incorrect information with insights into how people use that system, and in what context. Multi-layered evaluations can draw conclusions beyond model capability and indicate whether harm (in this case, misinformation) actually occurs and spreads.

To make any technology work as intended, both social and technical challenges must be solved. So to better assess AI system safety, these different layers of context must be taken into account. Here, we build upon earlier research identifying the potential risks of large-scale language models, such as privacy leaks, job automation, and misinformation, and introduce a way of comprehensively evaluating these risks going forward.

Context is critical for evaluating AI risks

The capabilities of AI systems are an important indicator of the types of wider risks that may arise. For example, AI systems that are more likely to produce factually inaccurate or misleading outputs may be more prone to creating risks of misinformation, causing issues such as a loss of public trust.

Measuring these capabilities is core to AI safety assessments, but these assessments alone cannot ensure that AI systems are safe. Whether downstream harm manifests (for example, whether people come to hold false beliefs based on inaccurate model output) depends on context. More specifically, who uses the AI system and with what goal? Does the AI system function as intended? Does it create unexpected externalities? All these questions inform an overall evaluation of the safety of an AI system.

Extending beyond capability evaluation, we propose evaluation that can assess two additional points where downstream risks manifest: human interaction at the point of use, and systemic impact as an AI system is embedded in broader systems and widely deployed. Integrating evaluations of a given risk of harm across these layers provides a comprehensive evaluation of the safety of an AI system.

Human interaction evaluation centres the experience of people using an AI system. How do people use the AI system? Does the system perform as intended at the point of use, and how do experiences differ between demographics and user groups? Can we observe unexpected side effects from using this technology or being exposed to its outputs?

Systemic impact evaluation focuses on the broader structures into which an AI system is embedded, such as social institutions, labour markets, and the natural environment. Evaluation at this layer can shed light on risks of harm that become visible only once an AI system is adopted at scale.

Safety evaluations are a shared responsibility

AI developers need to ensure that their technologies are developed and released responsibly. Public actors, such as governments, are tasked with upholding public safety. As generative AI systems are increasingly widely used and deployed, ensuring their safety is a shared responsibility between multiple actors:

- AI developers are well-placed to interrogate the capabilities of the systems they produce.

- Application developers and designated public authorities are positioned to assess the functionality of different features and applications, and possible externalities to different user groups.

- Broader public stakeholders are uniquely positioned to forecast and assess the societal, economic, and environmental implications of novel technologies, such as generative AI.

The three layers of evaluation in our proposed framework are a matter of degree, rather than being neatly divided. While none of them is entirely the responsibility of a single actor, the primary responsibility depends on who is best placed to perform evaluations at each layer.

Gaps in current safety evaluations of generative multimodal AI

Given the importance of this additional context for evaluating the safety of AI systems, it is important to understand which such evaluations are available. To better understand the broader landscape, we made a wide-ranging effort to collate evaluations that have been applied to generative AI systems, as comprehensively as possible.

By mapping the current state of safety evaluations for generative AI, we found three main safety evaluation gaps:

- Context: Most safety assessments consider generative AI system capabilities in isolation. Comparatively little work has been done to assess potential risks at the point of human interaction or of systemic impact.

- Risk-specific evaluations: Capability evaluations of generative AI systems are limited in the risk areas that they cover. For many risk areas, few evaluations exist. Where they do exist, evaluations often operationalise harm in narrow ways. For example, representation harms are typically defined as stereotypical associations of occupations with different genders, leaving other instances of harm and other risk areas undetected.

- Multimodality: The vast majority of existing safety evaluations of generative AI systems focus solely on text output, and large gaps remain for evaluating risks of harm in image, audio, or video modalities. This gap is only widening with the introduction of multiple modalities in a single model, such as AI systems that can take images as inputs or produce outputs that interweave audio, text, and video. While some text-based evaluations can be applied to other modalities, new modalities introduce new ways in which risks can manifest. For example, a description of an animal is not harmful in itself, but it becomes harmful if the same description is applied to an image of a person.

We’re making a list of links to publications that detail safety evaluations of generative AI systems openly accessible via this repository. If you would like to contribute, please add evaluations by filling out this form.

Putting more comprehensive evaluations into practice

Generative AI systems are powering a wave of new applications and innovations. To make sure that potential risks from these systems are understood and mitigated, we urgently need rigorous and comprehensive evaluations of AI system safety that take into account how these systems may be used and embedded in society.

A practical first step is repurposing existing evaluations and leveraging large models themselves for evaluation, though this has important limitations. For more comprehensive evaluation, we also need to develop approaches to evaluate AI systems at the point of human interaction and their systemic impacts. For example, while spreading misinformation through generative AI is a recent issue, we show there are many existing methods of evaluating public trust and credibility that could be repurposed.

Ensuring the safety of widely used generative AI systems is a shared responsibility and priority. AI developers, public actors, and other parties must collaborate and collectively build a thriving and robust evaluation ecosystem for safe AI systems.