OpenAI has just sent shockwaves through the AI world: for the first time since GPT-2 hit the scene in 2019, the company is releasing not one but TWO open-weight language models. Meet gpt-oss-120b and gpt-oss-20b, models that anyone can download, inspect, fine-tune, and run on their own hardware. This release doesn’t just shift the AI landscape; it kicks off a new era of transparency, customization, and raw computational power for researchers, developers, and enthusiasts everywhere.

Why Is This Release Such a Big Deal?

OpenAI has long cultivated a reputation for both jaw-dropping model capabilities and a fortress-like approach to proprietary tech. That changed on August 5, 2025. The new models are distributed under the permissive Apache 2.0 license, which makes them open for commercial and experimental use. The difference? Instead of hiding behind cloud APIs, anyone can now put OpenAI-grade models under the microscope, or put them directly to work on problems at the edge, in the enterprise, and even on consumer devices.

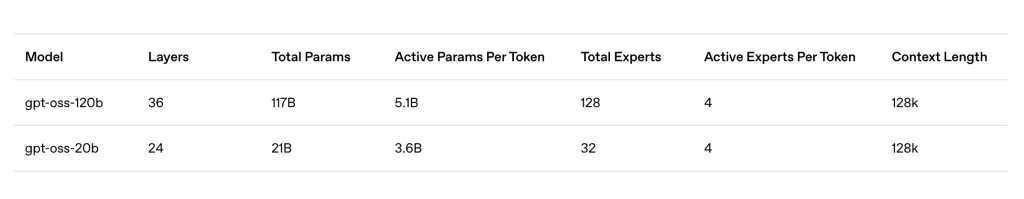

Meet the Models: Technical Marvels with Real-World Muscle

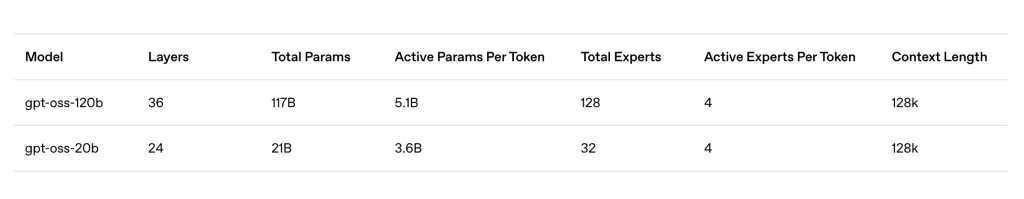

gpt-oss-120B

- Size: 117 billion parameters (with 5.1 billion active parameters per token, thanks to Mixture-of-Experts)

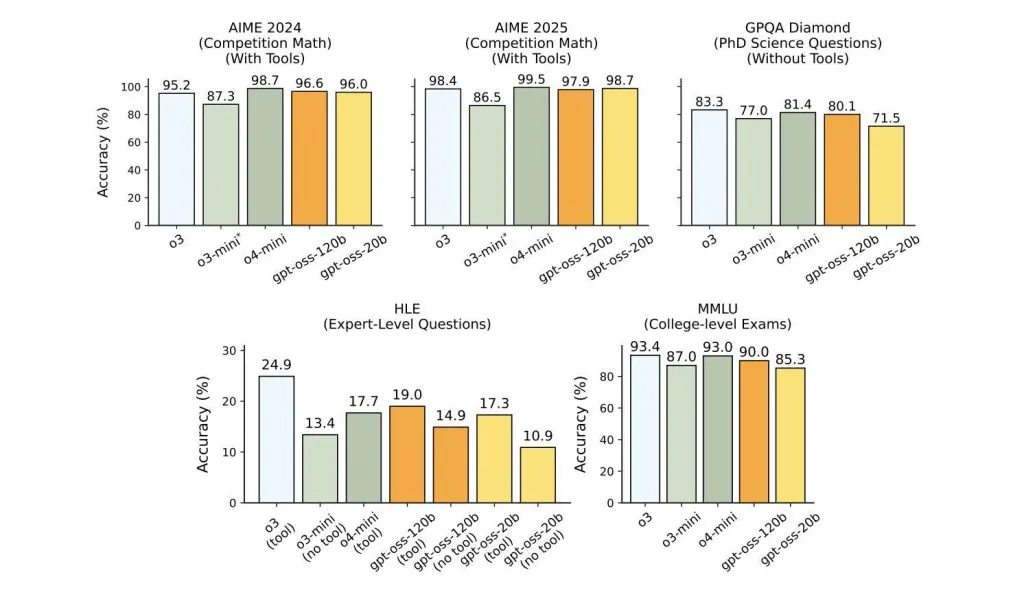

- Performance: Punches at the level of OpenAI’s o4-mini (or better) on real-world benchmarks.

- Hardware: Runs on a single high-end GPU (think Nvidia H100 or other 80GB-class cards). No server farm required.

- Reasoning: Features chain-of-thought and agentic capabilities, well suited to research automation, technical writing, code generation, and more.

- Customization: Supports a configurable “reasoning effort” (low, medium, high), so you can dial up power when you need it or save resources when you don’t.

- Context: Handles up to a massive 128,000 tokens, enough text to take in an entire book in one go.

- Fine-Tuning: Built for easy customization and local/private inference: no rate limits, full data privacy, and complete deployment control.
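As a rough illustration of how the reasoning-effort setting might be wired into an application, the sketch below builds a chat-style message list whose system message requests a given level. It assumes the convention described in the gpt-oss model card, where a line such as `Reasoning: high` in the system prompt selects the level; treat that exact phrasing as an assumption and confirm it against OpenAI’s documentation. The helper name `build_messages` is hypothetical.

```python
# Hypothetical helper: builds OpenAI-style chat messages that request a given
# reasoning effort. The "Reasoning: <level>" system line is an ASSUMPTION based
# on the gpt-oss model card; verify it against the official documentation.
VALID_EFFORTS = ("low", "medium", "high")


def build_messages(user_prompt: str, effort: str = "medium") -> list:
    """Return a message list whose system prompt selects the reasoning effort."""
    if effort not in VALID_EFFORTS:
        raise ValueError(f"effort must be one of {VALID_EFFORTS}, got {effort!r}")
    return [
        {"role": "system", "content": f"Reasoning: {effort}"},
        {"role": "user", "content": user_prompt},
    ]


if __name__ == "__main__":
    # Dial effort up for a hard problem, down for a quick lookup.
    print(build_messages("Prove that sqrt(2) is irrational.", effort="high"))
    print(build_messages("What is the capital of France?", effort="low"))
```

Keeping the effort level in one validated helper makes it easy to trade latency for answer quality per request rather than per deployment.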

gpt-oss-20B

- Size: 21 billion parameters (with 3.6 billion active parameters per token, also Mixture-of-Experts).

- Performance: Sits squarely between o3-mini and o4-mini on reasoning tasks, on par with the best “small” models available.

- Hardware: Runs on consumer-grade laptops. With just 16GB of RAM or equivalent, it’s the most powerful open-weight reasoning model you can fit on a phone or local PC.

- Mobile Ready: Specifically optimized to deliver low-latency, private on-device AI for smartphones (including Qualcomm Snapdragon support), edge devices, and any scenario that needs local inference without the cloud.

- Agentic Powers: Like its big sibling, the 20B model can call APIs, generate structured outputs, and execute Python code on demand.
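The parameter counts above are easier to interpret as a ratio. This small back-of-the-envelope sketch uses only the figures quoted in the two lists; the `MODELS` table and `active_fraction` helper are illustrative names, not part of any official tooling.

```python
# Back-of-the-envelope arithmetic using the parameter counts quoted above.
# In an MoE model, "active" parameters are the ones actually used to process
# a single token; the remaining weights sit idle for that token.
MODELS = {
    # name: (total parameters, active parameters per token), in billions
    "gpt-oss-120b": (117.0, 5.1),
    "gpt-oss-20b": (21.0, 3.6),
}


def active_fraction(total_b: float, active_b: float) -> float:
    """Fraction of all weights that participate in one token's forward pass."""
    return active_b / total_b


if __name__ == "__main__":
    for name, (total_b, active_b) in MODELS.items():
        frac = active_fraction(total_b, active_b)
        print(f"{name}: {active_b}B of {total_b}B active per token ({frac:.1%})")
```

That works out to roughly 4.4% of the weights per token for the 120B model and about 17% for the 20B model, which is why per-token compute is closer to that of a 5B or 4B dense model than to the headline size.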

Technical Details: Mixture-of-Experts and MXFP4 Quantization

Both models use a Mixture-of-Experts (MoE) architecture, activating only a handful of “expert” subnetworks for each token. The result? Enormous total parameter counts that cost only a small fraction of the compute per token, and therefore fast inference, well suited to today’s high-performance consumer and enterprise hardware. All of the experts still have to be held in memory, though, which is where quantization comes in.
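To make the routing idea concrete, here is a minimal sketch of generic top-k expert routing for a single token in plain Python. It illustrates the MoE technique in general and is not OpenAI’s implementation: the function name `moe_forward`, the dot-product router, the default `top_k=2`, and the toy experts are all hypothetical.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    token: Sequence[float],
    router_weights: Sequence[Sequence[float]],  # one row of router weights per expert
    experts: Sequence[Callable[[Sequence[float]], Vector]],
    top_k: int = 2,
) -> Vector:
    """Route one token to its top_k experts and mix their outputs (generic sketch)."""
    # 1. Router: one score (logit) per expert via a dot product with the token.
    logits = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    # 2. Keep only the top_k highest-scoring experts.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:top_k]
    # 3. Softmax over the chosen experts' logits gives the mixing weights.
    gates = softmax([logits[i] for i in chosen])
    # 4. Only the chosen experts run; the others cost no compute for this token.
    out = [0.0] * len(token)
    for gate, i in zip(gates, chosen):
        y = experts[i](token)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out


if __name__ == "__main__":
    # Four toy "experts" that each scale the input by a different factor.
    toy_experts = [lambda v, s=s: [s * x for x in v] for s in (1.0, 2.0, 3.0, 4.0)]
    router = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, -1.0]]
    print(moe_forward([0.5, 2.0], router, toy_experts, top_k=2))
```

Note that every expert in `toy_experts` still exists in memory even though only two run per token: MoE saves compute, not storage.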

Add to that native MXFP4 quantization of the MoE weights, which shrinks the models’ memory footprint with minimal loss of accuracy. The 120B model fits snugly onto a single advanced GPU; the 20B model can run comfortably on laptops, desktops, and even mobile hardware.
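For readers curious what MXFP4 actually stores, here is a simplified sketch of block quantization in the style of the Open Compute Project (OCP) Microscaling (MX) specification: each block of 32 values shares one power-of-two scale (an 8-bit exponent), and each value is kept as a 4-bit FP4 (E2M1) number. This is an illustration under stated simplifications (round-to-nearest on the FP4 value grid, no exponent-range limits, no bit packing), not OpenAI’s kernel code, and all function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

BLOCK_SIZE = 32   # MX block size: 32 elements share one scale
FP4_EMAX = 2      # largest exponent of FP4 E2M1 (max value 6 = 1.5 * 2**2)
# Non-negative magnitudes representable in FP4 E2M1.
FP4_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]


def quantize_block(block: Sequence[float]) -> Tuple[int, List[float]]:
    """Quantize one block to (shared power-of-two exponent, FP4 values)."""
    amax = max(abs(v) for v in block)
    if amax == 0.0:
        return 0, [0.0] * len(block)
    # Shared scale 2**exp puts the largest value near the top of the FP4 range.
    exp = math.floor(math.log2(amax)) - FP4_EMAX
    scale = 2.0 ** exp
    codes = []
    for v in block:
        mag = min(abs(v) / scale, FP4_GRID[-1])            # clamp to the FP4 max (6)
        nearest = min(FP4_GRID, key=lambda g: abs(g - mag))  # round to nearest grid value
        codes.append(math.copysign(nearest, v))
    return exp, codes


def dequantize_block(exp: int, codes: Sequence[float]) -> List[float]:
    """Multiply each FP4 value back by the shared scale."""
    return [c * 2.0 ** exp for c in codes]


def bits_per_weight(block_size: int = BLOCK_SIZE) -> float:
    """4 bits per element plus one 8-bit shared scale amortized over the block."""
    return 4 + 8 / block_size


if __name__ == "__main__":
    weights = [0.02 * (i - 16) for i in range(BLOCK_SIZE)]  # toy block of weights
    exp, codes = quantize_block(weights)
    restored = dequantize_block(exp, codes)
    print("shared exponent:", exp)
    print("max abs error:", max(abs(a - b) for a, b in zip(weights, restored)))
    for name, params_b in (("gpt-oss-120b", 117.0), ("gpt-oss-20b", 21.0)):
        gb = params_b * bits_per_weight() / 8  # billions of params * bits / 8 = GB
        print(f"{name}: ~{gb:.1f} GB if every weight were MXFP4")
```

At 4.25 bits per weight, the idealized totals come to roughly 62 GB for the 120B model and 11 GB for the 20B model, consistent with the 80GB-GPU and 16GB-RAM targets above. Real footprints differ, since only the MoE weights use MXFP4 and the rest stay at higher precision.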

Real-World Impact: Tools for Enterprise, Developers, and Hobbyists

- For Enterprises: On-premises deployment for data privacy and compliance. No more black-box cloud AI: the financial, healthcare, and legal sectors can now own and secure every bit of their LLM workflow.

- For Developers: Freedom to tinker, fine-tune, and extend. No API limits, no SaaS bills, just pure, customizable AI with full control over latency and cost.

- For the Community: The models are already available on Hugging Face, Ollama, and more, so you can go from download to deployment in minutes.
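As one example of that download-to-deployment path, the sketch below talks to a locally running Ollama server over its REST chat endpoint using only the Python standard library. It assumes Ollama’s default address (`http://localhost:11434`), its `/api/chat` endpoint, and a model tag of `gpt-oss:20b`; the tag in particular is an assumption, so check `ollama list` on your machine. The helper names `build_chat_request` and `chat` are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/chat"  # Ollama's default local endpoint
MODEL_TAG = "gpt-oss:20b"                        # ASSUMED tag; check `ollama list`


def build_chat_request(prompt: str, model: str = MODEL_TAG) -> urllib.request.Request:
    """Build (but do not send) a non-streaming chat request for a local Ollama server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one JSON response instead of a token stream
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str) -> str:
    """Send the request to a running Ollama server and return the reply text."""
    with urllib.request.urlopen(build_chat_request(prompt)) as resp:
        body = json.load(resp)
    return body["message"]["content"]
```

With the model pulled and the server running, `print(chat("Explain Mixture-of-Experts in one sentence."))` should print the model’s answer; nothing leaves the machine, which is the privacy point made above.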

How Does GPT-OSS Stack Up?

Here’s the kicker: gpt-oss-120B is the first freely available open-weight model to match the performance of top-tier commercial models like o4-mini. The 20B variant not only bridges the performance gap for on-device AI but is likely to accelerate innovation and push the boundaries of what’s possible with local LLMs.

The Future Is Open (Again)

OpenAI’s GPT-OSS isn’t just a release; it’s a clarion call. By making state-of-the-art reasoning, tool use, and agentic capabilities available for anyone to inspect and deploy, OpenAI throws open the door to a whole community of makers, researchers, and enterprises, not just to use these models but to build on them, iterate on them, and evolve them.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.