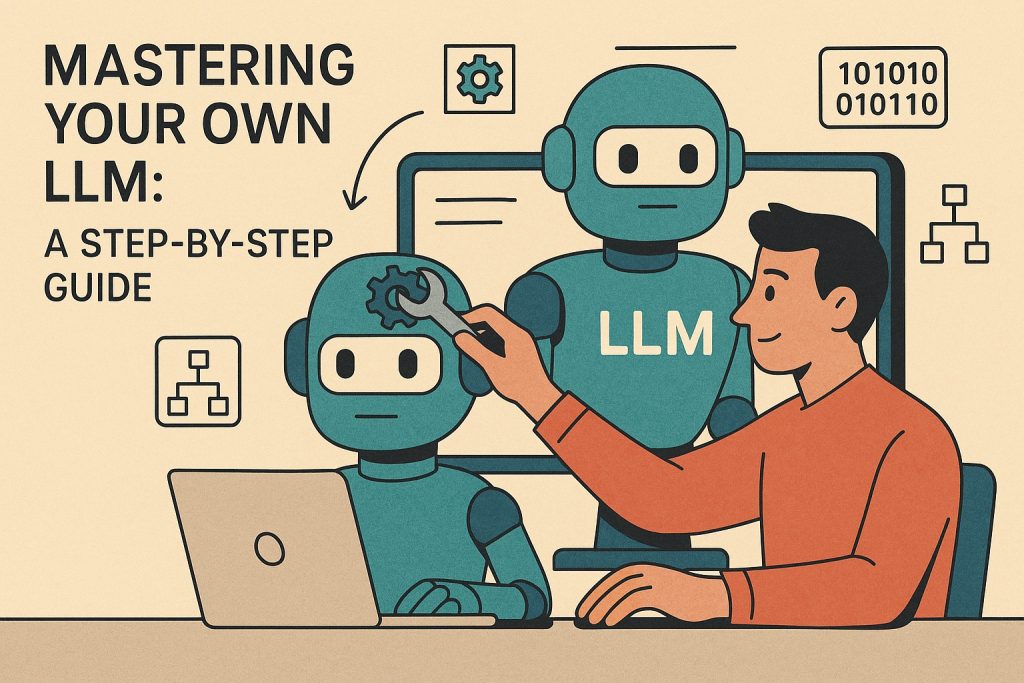

Mastering Your Own LLM: A Step-by-Step Guide

Mastering Your Own LLM: A Step-by-Step Guide is your entry point into the world of personalized artificial intelligence. If you’re concerned about the privacy of cloud-based AI tools like ChatGPT or Bard, you’re not alone. Interest in running private, local large language models (LLMs) is growing fast, and for good reasons: better data privacy, full control over outputs, and no required internet connection. Imagine asking a powerful AI questions without sending data off to the cloud. This guide will walk you through setting up your own LLM, even if you’re not a developer or tech wizard. Ready to unlock the potential of your private AI assistant? Let’s begin.

Why Run an LLM Locally?

There are significant benefits to hosting your own large language model. For one, it puts you in control of your data. Commercial AI tools operate on remote cloud servers, meaning your input, no matter how sensitive, goes to third-party servers. Running a model on your personal machine removes this risk.

Another reason is cost. Subscription fees for pro-level access to AI APIs can add up over time. Hosting a local model, while requiring some initial setup and hardware, can eliminate ongoing costs.

Speed and availability are also factors. A local LLM has no network latency and doesn’t depend on internet connectivity, making it ideal for tasks in remote locations or during outages. Developers, writers, researchers, and hobbyists alike are turning to this method for convenience and tailored functionality.

Choosing the Right Model for Your Needs

Not all LLMs are created equal. Before diving into setup, it’s important to assess what kinds of tasks you expect your model to perform. Some models are geared toward chat assistance, others toward code completion or document summarization.

For general use, the most popular openly available model family today is Meta’s LLaMA (Large Language Model Meta AI). Its variants, LLaMA 2 and LLaMA 3, are favored for offering high performance and are free for personal use. You’ll also find derivatives like Alpaca and Vicuna that are fine-tuned for specific tasks, as well as independent open models such as Mistral.

Model files are often shared online in various formats such as GGUF (GPT-Generated Unified Format), which is optimized for memory efficiency. These files can range from under 2 GB to over 30 GB depending on the model’s size and quantization. Choose wisely based on your hardware capabilities and intended functionality.

Installing Key Software: llama.cpp and Ollama

Running an LLM requires specialized software. Among the most efficient tools available today is llama.cpp, a C/C++ implementation optimized for running LLaMA models on consumer-grade CPUs.

Installation steps are generally straightforward:

- Download and install the latest build of llama.cpp from its official GitHub repository.

- Obtain a compatible model file (GGUF format recommended) from a verified model-sharing hub like Hugging Face (for example, the GGUF repositories published there by the user TheBloke).

- Place the GGUF file in the designated llama.cpp models folder.

You can then access the model using a command-line terminal or scripts that automate interaction. This setup allows you to chat directly with your chosen model without any external server involvement.
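As a rough illustration of a script that automates interaction, the sketch below only assembles the command line for one text-generation run. It assumes a recent llama.cpp build whose command-line binary is named llama-cli and accepts the -m (model file), -p (prompt), and -n (tokens to generate) options; the paths are hypothetical placeholders.

```python
import shlex
from pathlib import Path


def build_llama_command(binary, model_path, prompt, max_tokens=128):
    """Return the argument list for a single llama.cpp text-generation run."""
    return [
        str(binary),
        "-m", str(model_path),   # path to the GGUF model file
        "-p", prompt,            # prompt text
        "-n", str(max_tokens),   # maximum number of tokens to generate
    ]


# Hypothetical paths: adjust to wherever you built llama.cpp and stored the model.
command = build_llama_command(
    binary=Path("llama.cpp/build/bin/llama-cli"),
    model_path=Path("llama.cpp/models/model.gguf"),
    prompt="Explain what a GGUF file is in one sentence.",
)
print(shlex.join(command))
# To actually run it: import subprocess; subprocess.run(command, check=True)
```

Building the arguments as a list, rather than one long string, avoids quoting problems when the prompt contains spaces or quotation marks.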

For Mac users running Apple Silicon (M1, M2, M3 chips), llama.cpp works especially well thanks to native hardware optimization. For those who want less manual setup, Ollama is a user-friendly alternative. It bundles an installer, model downloads, and a built-in chat session behind a few simple commands, and it supports similar model formats for quicker setup.

Optimizing for Speed and Performance

While high-end desktops with strong GPUs offer the best performance, modern LLMs are increasingly optimized for CPU usage. llama.cpp uses quantized models, meaning the numerical precision of the model’s weights is reduced to improve processing speed and cut memory use with only a small loss in quality.
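To make the idea of reduced precision concrete, here is a toy sketch of 4-bit quantization: each weight is stored as a small integer plus one shared scale factor. This is a simplification for illustration only; the actual GGUF quantization schemes work on blocks of weights and are more elaborate.

```python
def quantize_4bit(weights):
    """Map floats to integers in [-8, 7] using one shared scale factor."""
    largest = max(abs(w) for w in weights)
    scale = largest / 7 if largest else 1.0
    codes = [max(-8, min(7, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate floats from the integer codes."""
    return [c * scale for c in codes]


weights = [0.70, -0.33, 0.10, 0.02, -0.69]
codes, scale = quantize_4bit(weights)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(codes)
print(f"largest rounding error: {max_error:.3f}")
```

Each restored value differs from the original by at most half of the scale step, which is the precision traded away for a much smaller file.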

For best results, meet the following specs:

- Minimum of 8 GB RAM (16 GB is ideal)

- Apple Silicon M1 or newer (for Mac users)

- Quad-core Intel or AMD CPU (for Windows/Linux users)

- Dedicated SSD for faster model loading

Using smaller quantized versions of models (4-bit or 5-bit) can significantly improve execution time while maintaining usability for casual tasks such as basic writing or data summarization.
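A back-of-the-envelope calculation shows why the bit width matters so much for the RAM figures above. The sketch below assumes the file size is dominated by the weights (parameters × bits per weight); real files run somewhat larger because of metadata and tensors kept at higher precision. The 7-billion-parameter model is a hypothetical example.

```python
def estimate_model_size_gb(num_parameters, bits_per_weight):
    """Approximate weight storage in gigabytes (1 GB = 10**9 bytes)."""
    total_bytes = num_parameters * bits_per_weight / 8
    return total_bytes / 1e9


# A hypothetical 7-billion-parameter model at several precisions.
for bits in (16, 8, 5, 4):
    size = estimate_model_size_gb(7_000_000_000, bits)
    print(f"{bits:>2}-bit: about {size:.1f} GB")
```

By this estimate the 4-bit version needs roughly a quarter of the memory of the 16-bit one, which is what lets it fit on a machine with 8 GB of RAM.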

Enhancing Functionality with Extensions

Running an LLM on its own is powerful, but you can take its capabilities further using extensions. Some developers create wrappers or plugins to connect LLMs with tools like web browsers, PDF readers, or email clients.

Common enhancements include:

- Context memory: Save interaction history and allow the model to recall previous instructions

- Speech-to-text: Convert voice commands into model inputs

- APIs: Trigger external applications like calendars or databases

These plugins often require light programming skills to install and customize, but many come with tutorials and scripts to simplify usage.
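Context memory, the first enhancement listed above, can be sketched in a few lines. The class below is a hypothetical illustration rather than any particular plugin’s design: it keeps the most recent turns and prepends them to each new prompt so the model can “recall” them.

```python
class ConversationMemory:
    """Keep the most recent chat turns and render them as a prompt prefix."""

    def __init__(self, max_turns=6):
        self.max_turns = max_turns
        self.turns = []  # list of (role, text) tuples, oldest first

    def add(self, role, text):
        self.turns.append((role, text))
        # Drop the oldest turns so the prompt stays within a fixed budget.
        self.turns = self.turns[-self.max_turns:]

    def build_prompt(self, new_message):
        history = "\n".join(f"{role}: {text}" for role, text in self.turns)
        prefix = history + "\n" if history else ""
        return f"{prefix}user: {new_message}\nassistant:"


memory = ConversationMemory(max_turns=2)
memory.add("user", "My name is Ada.")
memory.add("assistant", "Nice to meet you, Ada.")
print(memory.build_prompt("What is my name?"))
```

Capping the number of stored turns matters because every model has a limited context window; older turns have to be dropped or summarized at some point.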

Staying Private and Safe

One of the main reasons for setting up a local LLM is to ensure privacy. That doesn’t mean you can relax your security posture. Keep your laptop or desktop protected with antivirus software and update your operating system regularly to limit vulnerabilities.

Only download model files and setup scripts from trusted sources. Run checksum verifications to ensure that files haven’t been altered. If you’re using wrappers or custom plugins, review the source code yourself or consult community forums to verify safety.
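A checksum verification can be done with Python’s standard library alone. This sketch assumes the download page publishes a SHA-256 hash for the file; the function names are illustrative.

```python
import hashlib
import hmac


def sha256_of_file(path, chunk_size=1024 * 1024):
    """Hash a file in chunks so multi-gigabyte models never sit fully in RAM."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_checksum(path, expected_hex):
    """Compare the file's SHA-256 with the value published by the source."""
    actual = sha256_of_file(path)
    return hmac.compare_digest(actual, expected_hex.strip().lower())
```

For example, matches_published_checksum("model.gguf", "<hash from the download page>") returns True only when the file on disk is byte-for-byte identical to the one the publisher hashed.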

Offline usage is your best assurance of privacy. Once a model is downloaded and set up, you should be able to disconnect from the internet and continue using your LLM without concern.

Common Troubleshooting Tips

Even with the best preparation, you may hit occasional snags during installation or model execution. Some common issues include:

- “Illegal instruction” errors: These usually occur if your CPU doesn’t support the instruction set used during compilation (for example, AVX2). Try downloading an alternate build or compiling from source on your own machine.

- Model loads but won’t respond: This typically results from using the wrong model format. Make sure you’re using GGUF or a supported variant.

- Slow response times: Switch to a lower-bit quantized model, or check that your system isn’t running resource-intensive background programs.
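For the “Illegal instruction” case, it can help to check which instruction-set extensions your CPU advertises. The sketch below parses text in the format of /proc/cpuinfo, so it applies to x86 Linux only; which features a given build actually requires is an assumption here (AVX and AVX2 are used as examples).

```python
def parse_cpu_flags(cpuinfo_text):
    """Collect the feature flags listed in /proc/cpuinfo-style text."""
    flags = set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            flags.update(value.split())
    return flags


def missing_features(cpuinfo_text, required=("avx", "avx2")):
    """Return the required features that the CPU does not advertise."""
    available = parse_cpu_flags(cpuinfo_text)
    return [name for name in required if name not in available]


# Sample text standing in for the contents of /proc/cpuinfo on an older CPU.
sample = "processor\t: 0\nflags\t\t: fpu sse sse2 avx\n"
print(missing_features(sample))
```

On a real Linux machine, pass the contents of /proc/cpuinfo instead of the sample string. An empty result means every listed feature is available.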

Check user communities on Reddit or GitHub Discussions for quick solutions. Many of these platforms have active users sharing up-to-date answers and setup tips.

Running an LLM with Ollama

To run a large language model (LLM) on your computer using Ollama, follow the step-by-step guide below. Ollama is a tool that allows you to run various open, GPT-style LLMs locally on your machine, such as Llama and Mistral models.

Prerequisites:

- macOS, Linux, or Windows

- Hardware requirements:

  - A computer with at least 8 GB of RAM.

  - At least 10 GB of free disk space for models.

- Docker is not required. Ollama installs and runs natively, although an official Docker image is available if you prefer a containerized environment.
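The disk-space prerequisite is easy to check from a script. This is a minimal sketch using Python’s standard library; the 10 GB threshold comes from the list above, and the path argument should point at the drive where you plan to store models (the current directory is only a default).

```python
import shutil


def has_free_space(path=".", required_gb=10):
    """Return True if the drive holding `path` has at least `required_gb` free."""
    free_bytes = shutil.disk_usage(path).free
    return free_bytes >= required_gb * 1e9


print("Enough disk space for models:", has_free_space())
```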

Step 1: Install Ollama

To install Ollama, follow these instructions:

- Download Ollama from https://ollama.com/download.

- Install the application:

  - On Mac, open the .dmg file and drag the Ollama app to your Applications folder.

  - On Linux, use the terminal to install:

  curl -fsSL https://ollama.com/install.sh | sh

- Follow any additional setup steps from the installer.

Step 2: Launch the Ollama Application

- Open Ollama from your Applications folder on Mac, or from the terminal on Linux.

- Check that Ollama is running properly:

  - Open a terminal and type:

  ollama --version

  - This command should return the installed version of Ollama if the installation was successful.

Step 3: Run a Model with Ollama

Ollama supports running many open LLMs, such as Llama and Mistral models. It does not run hosted models such as GPT-3 or ChatGPT. To run a model, use the following steps:

- Open Terminal:

  - Open the terminal or command-line interface on your computer.

- List Available Models:

  - You can see which models are already downloaded to your machine by running:

  ollama list

  - This will show you the LLMs that you can run right away. Other models can be downloaded by name with ollama pull MODEL_NAME.

- Run a Specific Model:

  - To run a model, use:

  ollama run MODEL_NAME

  - Replace MODEL_NAME with the name of the model you want to use (e.g., llama3 or mistral). If the model is not on your machine yet, Ollama downloads it first.

- Run the LLM in Interactive Mode:

  - The ollama run command starts an interactive session by default, so no extra flag is needed. It opens a terminal-based chat where you can type messages, and the model will respond interactively. Type /bye to exit.

Step 4: Customize the Model’s Behavior

You can set certain parameters to customize the model’s behavior. For example, you can adjust temperature (which controls how random, or “creative”, the output is), or provide specific instructions for more controlled responses.

- Set Parameters:

  - The ollama run command has no temperature flag. To adjust the temperature, start a session with ollama run MODEL_NAME and then enter:

  /set parameter temperature 0.7

- Provide a Custom Prompt:

  - You can also pass a prompt directly on the command line. For example:

  ollama run MODEL_NAME "Tell me about the future of AI."

Step 5: Interact with Models via API (Optional)

- Run Ollama API:

  - If you’d like to integrate the model with your own code, you can use Ollama’s HTTP API. The desktop app starts the API server automatically. To start it manually:

  ollama serve

- Make API Calls:

  - You can now interact with the model via HTTP requests, using curl or any HTTP client library in your code. By default the server listens on port 11434. For example:

  curl -X POST http://localhost:11434/api/generate -H "Content-Type: application/json" -d '{"model": "MODEL_NAME", "prompt": "Hello, world!", "stream": false}'

Step 6: Monitor Resource Usage (Optional)

Since LLMs can be resource-intensive, you can monitor your system’s resource usage to ensure smooth performance.

- Monitor CPU/RAM usage:

  - On Mac, use Activity Monitor.

  - On Linux, use:

  top

- Optimize Performance:

  - If the model is too slow or your system resources are overloaded, try reducing the number of active processes or switching to a smaller model.

Step 7: Troubleshooting

- Issue: Model not running:

  - If the model doesn’t load, ensure your system meets the minimum hardware and software requirements. Check the server logs for errors. On Linux systems where Ollama runs as a systemd service, use:

  journalctl -u ollama

  - On Mac, the server log is stored at ~/.ollama/logs/server.log.

- Issue: Model performance is low:

  - Try running smaller models or closing other applications to free up system resources.
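The API call from Step 5 can also be made from Python. This is a minimal sketch under stated assumptions: a default Ollama install whose server listens on http://localhost:11434 and exposes the /api/generate endpoint, and a model named llama3 that you have already downloaded (the name is only an example). It uses the standard library, so no extra packages are needed.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # assumed default address


def build_request(model, prompt, temperature=0.7):
    """Create the HTTP request for a single, non-streamed completion."""
    payload = {
        "model": model,
        "prompt": prompt,
        "stream": False,                          # one JSON reply instead of chunks
        "options": {"temperature": temperature},  # same setting as in Step 4
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model, prompt, temperature=0.7, timeout=120):
    """Send the prompt to a running Ollama server and return its text reply."""
    request = build_request(model, prompt, temperature)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["response"]


# Example (requires a running server and a downloaded model):
# print(generate("llama3", "Hello, world!"))
```

Keeping request construction separate from the network call makes the payload easy to inspect or log before anything is sent.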

Conclusion: Your AI, Your Rules

Setting up your own large language model is no longer a task limited to experts. With improved tools, optimized models, and detailed guides, anyone can take advantage of local AI assistants. Whether you’re looking to protect your data, save money, or simply experiment with one of the most transformative technologies today, running your own local LLM is a smart investment. Follow these steps to launch a personal AI solution that meets your privacy standards and performance needs. Start mastering your own LLM today and take control of your digital conversations.
