In recent years, the rapid scaling of large language models (LLMs) has led to extraordinary improvements in natural language understanding and reasoning capabilities. However, this progress comes with a significant caveat: the inference process, which generates responses one token at a time, remains a computational bottleneck. As LLMs grow in size and complexity, the latency and energy demands of sequential token generation become substantial. These challenges are particularly acute in real-world deployments, where cost, speed, and scalability are critical. Traditional decoding approaches, such as greedy or beam search methods, often require repeated evaluations of large models, leading to high computational overhead. Moreover, even with parallel decoding techniques, maintaining both the efficiency and the quality of generated outputs can be elusive. This situation has spurred a search for novel techniques that can reduce inference costs without sacrificing accuracy. Researchers have therefore been exploring hybrid approaches that combine lightweight models with more powerful counterparts, striving for an optimal balance between speed and performance. That balance is essential for real-time applications, interactive systems, and large-scale deployment in cloud environments.

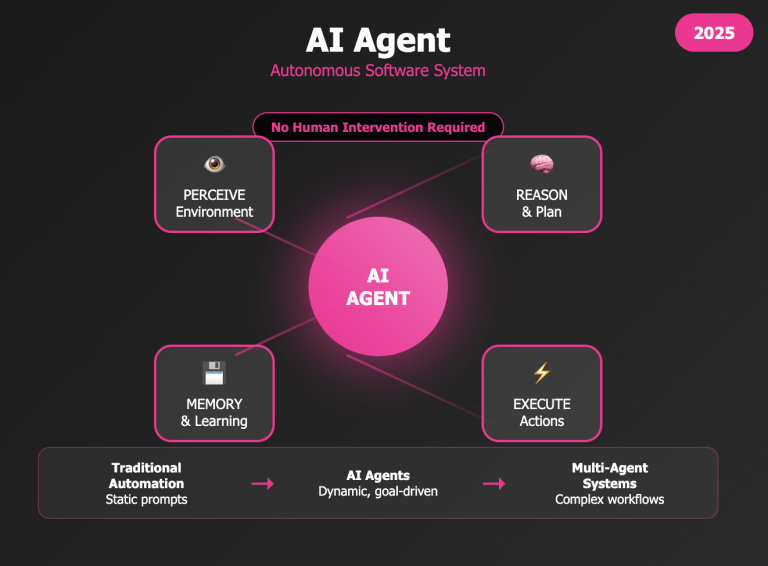

Salesforce AI Research introduces Reward-Guided Speculative Decoding (RSD), a novel framework aimed at improving the efficiency of inference in large language models (LLMs). At its core, RSD leverages a dual-model strategy: a fast, lightweight “draft” model works in tandem with a more robust “target” model. The draft model generates preliminary candidate outputs quickly, while a process reward model (PRM) evaluates the quality of these outputs in real time. Unlike traditional speculative decoding, which insists on strict unbiased token matching between the draft and target models, RSD introduces a controlled bias. This bias is carefully engineered to favor high-reward outputs (those deemed more likely to be correct or contextually relevant), thus significantly reducing unnecessary computation. The approach is grounded in a mathematically derived threshold strategy that determines when the target model should intervene. By dynamically mixing outputs from both models based on a reward function, RSD not only accelerates the inference process but also enhances the overall quality of the generated responses. Detailed in the accompanying paper, this methodology represents a significant step forward in addressing the inherent inefficiencies of sequential token generation in LLMs.
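One way to write down a reward-weighted mixture of this kind is sketched below. The notation is an assumption made here for illustration and is not taken from the article: z is the context so far, y a candidate output, P_m the draft model, P_M the target model, r the PRM reward, and δ the acceptance threshold. The constant ν is simply whatever probability mass the draft model leaves unaccepted, so the mixture sums to one.

```latex
% Assumed notation (illustrative, not from the article):
%   z = context so far, y = candidate output,
%   P_m = draft model, P_M = target model,
%   r = PRM reward, \delta = acceptance threshold.
\begin{aligned}
w\bigl(r(y \mid z)\bigr) &= \mathbf{1}\bigl[\, r(y \mid z) > \delta \,\bigr], \\
P_{\mathrm{RSD}}(y \mid z) &= w\bigl(r(y \mid z)\bigr)\, P_m(y \mid z) + \nu\, P_M(y \mid z), \\
\nu &= 1 - \mathbb{E}_{y' \sim P_m(\cdot \mid z)}\bigl[\, w\bigl(r(y' \mid z)\bigr) \,\bigr].
\end{aligned}
```

Summing the second line over y gives E[w] + ν = 1, which is why ν takes this form. With the binary step weighting, ν is the probability that a draft candidate is rejected and the target model is asked to generate instead.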

Technical Details and Benefits of RSD

On the technical side, RSD integrates two models in a sequential yet collaborative manner. First, the draft model produces candidate tokens or reasoning steps at a low computational cost. Each candidate is then evaluated using a reward function, which acts as a quality gate. If a candidate's reward exceeds a predetermined threshold, the output is accepted; if not, the system calls upon the more computationally intensive target model to generate a refined output. This process is guided by a weighting function, typically a binary step function, that adjusts the reliance on the draft versus the target model. The dynamic quality control afforded by the process reward model (PRM) ensures that only the most promising outputs bypass the target model, thereby saving computation. One of the standout features of this approach is “biased acceleration,” where the controlled bias is not a detriment but rather a strategic choice to prioritize high-reward outcomes. This results in two key benefits: first, the overall inference process can be up to 4.4× faster compared to running the target model alone; second, it typically yields a +3.5 average accuracy improvement over conventional parallel decoding baselines. In essence, RSD harmonizes efficiency with accuracy, allowing a substantial reduction in the number of floating-point operations (FLOPs) while still delivering outputs that meet or even exceed the performance of the target model. The theoretical underpinnings and algorithmic details, such as the mixture distribution defined by P_RSD and the adaptive acceptance criterion, provide a robust framework for practical deployment in diverse reasoning tasks.
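The accept-or-fall-back loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: `rsd_generate`, the three callables, the default threshold of 0.7, and the `<eos>` stop marker are all hypothetical names and values chosen for the example, and the toy models are deterministic stand-ins for real LLM and PRM calls.

```python
from typing import Callable, List, Tuple

# Hypothetical type aliases: a "step" is any chunk of generated text
# (a token or a full reasoning step).
StepFn = Callable[[str], str]            # context -> next step
RewardFn = Callable[[str, str], float]   # (context, step) -> reward score


def rsd_generate(
    prompt: str,
    draft_step: StepFn,
    target_step: StepFn,
    reward: RewardFn,
    threshold: float = 0.7,   # assumed value, for illustration only
    max_steps: int = 16,
    stop: str = "<eos>",      # assumed stop marker
) -> Tuple[List[str], int]:
    """Return the generated steps and how often the target model was called."""
    steps: List[str] = []
    target_calls = 0
    context = prompt
    for _ in range(max_steps):
        # 1. The cheap draft model proposes a candidate.
        candidate = draft_step(context)
        # 2. Binary step weighting: keep the draft only if its reward
        #    exceeds the threshold; otherwise ask the target model.
        if not reward(context, candidate) > threshold:
            candidate = target_step(context)
            target_calls += 1
        steps.append(candidate)
        context += candidate
        if candidate.endswith(stop):
            break
    return steps, target_calls


# Toy stand-ins so the sketch runs without any model weights.
def toy_draft(context: str) -> str:
    return "draft-step;"


def toy_target(context: str) -> str:
    # Emits the stop marker once three steps are already in the context.
    return "target-step;<eos>" if context.count(";") >= 3 else "target-step;"


def toy_reward(context: str, step: str) -> float:
    # Alternates between a high and a low score to exercise both branches.
    return 0.9 if context.count(";") % 2 == 0 else 0.2


if __name__ == "__main__":
    generated, calls = rsd_generate("Q:", toy_draft, toy_target, toy_reward)
    print(generated, calls)
```

In this sketch the target model is consulted only on rejected steps, so the fraction of accepted draft steps is what drives the compute savings. In a real system the three callables would wrap batched model calls, and the threshold would be tuned per task.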

Insights

The empirical validation of RSD is compelling. Experiments detailed in the paper show that, on challenging benchmarks such as GSM8K, MATH500, OlympiadBench, and GPQA, RSD consistently delivers superior performance. For instance, on the MATH500 benchmark, a dataset designed to test mathematical reasoning, RSD achieved an accuracy of 88.0 when configured with a 72B target model and a 7B PRM, compared to 85.6 for the target model running alone. This configuration not only uses nearly 4.4× fewer FLOPs but also improves reasoning accuracy. The results underscore the potential of RSD to outperform traditional methods such as speculative decoding (SD), and even advanced search-based strategies such as beam search or Best-of-N.
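To see where FLOP savings of this kind can come from, a rough back-of-the-envelope cost model helps. The sketch below is an assumption-laden illustration, not the paper's accounting: it supposes that every step pays for one draft call and one PRM call, and that the target model is paid for only on rejected steps. The function name `relative_cost` and the unit costs in the example are hypothetical.

```python
def relative_cost(
    accept_rate: float,
    draft_cost: float,
    prm_cost: float,
    target_cost: float,
) -> float:
    """Per-step cost of the hybrid scheme relative to target-only decoding.

    Assumed model: each step costs draft + PRM, plus the target model
    on the (1 - accept_rate) fraction of steps that are rejected.
    """
    if not 0.0 <= accept_rate <= 1.0:
        raise ValueError("accept_rate must be in [0, 1]")
    if target_cost <= 0:
        raise ValueError("target_cost must be positive")
    per_step = draft_cost + prm_cost + (1.0 - accept_rate) * target_cost
    return per_step / target_cost


if __name__ == "__main__":
    # Illustrative unit costs only: target is 10x the draft and the PRM.
    for rate in (0.5, 0.8, 0.95):
        ratio = relative_cost(rate, draft_cost=1.0, prm_cost=1.0, target_cost=10.0)
        print(f"accept_rate={rate:.2f} -> relative cost {ratio:.2f}")
```

Under this simplified model, the hybrid scheme only pays off when the draft and PRM together are much cheaper than the target and most draft steps are accepted. If the acceptance rate is low, the overhead of the extra models can make it more expensive than running the target alone.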

Conclusion: A New Paradigm for Efficient LLM Inference

In conclusion, Reward-Guided Speculative Decoding (RSD) marks a significant milestone in the quest for more efficient LLM inference. By combining a lightweight draft model with a powerful target model, and by introducing a reward-based acceptance criterion, RSD addresses the dual challenges of computational cost and output quality. Biased acceleration allows the system to selectively bypass expensive computations for high-reward outputs, thereby streamlining the inference process. The dynamic quality control mechanism, anchored by a process reward model, ensures that computational resources are allocated judiciously, engaging the target model only when necessary. With empirical results showing up to 4.4× faster inference and an average accuracy improvement of +3.5 over traditional methods, RSD paves the way for more scalable LLM deployments and sets a new standard in the design of hybrid decoding frameworks.

Check out the Paper and GitHub Page. All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.