Multimodal LLMs: Expanding Capabilities Across Text and Vision

Expanding large language models (LLMs) to handle multiple modalities, particularly images and text, has enabled more interactive and intuitive AI systems. Multimodal LLMs (MLLMs) can interpret visuals, answer questions about images, and hold dialogues that mix text and images. Because they can reason across visual and linguistic domains, they are increasingly valuable for applications such as education, content generation, and interactive assistants.

The Challenge of Text-Only Forgetting in MLLMs

However, integrating vision into LLMs creates a problem. When trained on datasets that mix images with text, MLLMs often lose their ability to handle purely textual tasks. This phenomenon, known as text-only forgetting, occurs because visual tokens inserted into the language sequence divert the model’s attention away from the text. As a result, the MLLM begins prioritizing image-related content and performs poorly on tasks that require only language understanding, such as basic reasoning, reading comprehension, or textual question answering (Q&A).
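The attention-diversion effect can be illustrated with a toy calculation. The sketch below is not taken from the WINGS paper: it assumes a single query with hypothetical pre-softmax logits and measures how much of its softmax attention mass lands on text tokens as more visual tokens are appended to the sequence.

```python
import numpy as np


def softmax(scores: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over the last axis."""
    shifted = scores - scores.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)


def text_attention_share(text_scores: np.ndarray, visual_scores: np.ndarray) -> float:
    """Fraction of one query's attention mass that falls on text-token keys.

    text_scores / visual_scores are hypothetical pre-softmax logits the query
    assigns to text keys and visual keys; a real model computes them as
    q . k / sqrt(d) inside each attention head.
    """
    weights = softmax(np.concatenate([text_scores, visual_scores]))
    return float(weights[: len(text_scores)].sum())


# 32 text tokens; every logit is equal, so only the token counts matter.
text_logits = np.zeros(32)
for n_visual in (0, 64, 576):  # hypothetical visual-token counts
    share = text_attention_share(text_logits, np.zeros(n_visual))
    print(f"{n_visual:4d} visual tokens -> text share {share:.3f}")
```

With equal logits the text share is simply 32 / (32 + n_visual), so every block of image tokens dilutes the attention available to the text even before the model learns to favor visual content.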

Limitations of Existing Mitigation Strategies

Several methods attempt to address this degradation. Some approaches reintroduce large amounts of text-only data during training, while others alternate between text-only and multimodal fine-tuning. These strategies aim to remind the model of its original language capabilities. Other designs add adapter layers or use prompt-based tuning. However, these techniques often increase training costs, require complex switching logic during inference, or fail to fully restore text comprehension. The problem largely stems from how the model’s attention shifts when image tokens are introduced into the sequence.
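To make the "alternate between text-only and multimodal fine-tuning" strategy concrete, here is a minimal scheduling sketch. The function name `alternating_schedule` and the fixed `steps_per_phase` switching rule are illustrative assumptions, not any specific published recipe.

```python
from itertools import islice
from typing import Iterable, Iterator, Tuple, TypeVar

T = TypeVar("T")


def alternating_schedule(
    text_batches: Iterable[T],
    multimodal_batches: Iterable[T],
    steps_per_phase: int,
) -> Iterator[Tuple[str, T]]:
    """Yield (source_name, batch) pairs in alternating phases (hypothetical helper).

    Each phase draws up to `steps_per_phase` batches from one source, then
    switches to the other. The schedule stops when the source whose turn it
    is has nothing left.
    """
    sources = [("text", iter(text_batches)), ("multimodal", iter(multimodal_batches))]
    phase = 0
    while True:
        name, source = sources[phase % 2]
        chunk = list(islice(source, steps_per_phase))
        if not chunk:
            return
        for batch in chunk:
            yield name, batch
        phase += 1


for source, batch in alternating_schedule(["t1", "t2", "t3"], ["m1", "m2", "m3"], 2):
    print(source, batch)
```

The switching logic lives entirely in the data pipeline, which hints at the training overhead mentioned above: every phase boundary is an extra hyperparameter to tune.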

Introducing WINGS: A Dual-Learner Approach by Alibaba and Nanjing University

Researchers from Alibaba Group’s AI Business team and Nanjing University have introduced a new approach called WINGS. The design adds two new modules, a visual learner and a textual learner, to every layer of the MLLM. These learners run in parallel with the model’s core attention mechanism, so the structure resembles “wings” attached to either side of the attention layers. A routing component decides how much weight each learner receives based on the current mix of tokens, letting the model dynamically balance its focus between visual and textual information.
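The layer layout described above can be sketched as follows. This is a toy NumPy illustration under loud assumptions: the main attention uses identity projections, each "wing" is reduced to a hypothetical linear map (`W_VISUAL`, `W_TEXT`), and the router is simplified to use each query's share of attention on visual tokens directly as the mixing weight. It shows the wiring, not the paper's exact formulation.

```python
import numpy as np

D_MODEL = 16  # hypothetical hidden size
rng = np.random.default_rng(0)
# Hypothetical learner weights; each wing is reduced to a linear map for brevity.
W_VISUAL = rng.normal(scale=0.1, size=(D_MODEL, D_MODEL))
W_TEXT = rng.normal(scale=0.1, size=(D_MODEL, D_MODEL))


def softmax(x: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over the last axis."""
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)


def main_attention(h: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Toy single-head self-attention with identity Q/K/V projections."""
    weights = softmax(h @ h.T / np.sqrt(h.shape[-1]))  # (n, n)
    return weights @ h, weights


def wings_layer(h: np.ndarray, is_visual: np.ndarray) -> np.ndarray:
    """Main attention plus two router-weighted wings.

    h: (n, d) hidden states; is_visual: (n,) boolean mask of image-token positions.
    """
    attn_out, weights = main_attention(h)
    # Simplified router: per-query share of attention that lands on visual tokens.
    visual_share = weights[:, is_visual].sum(axis=1, keepdims=True)  # (n, 1)
    text_share = 1.0 - visual_share
    visual_wing = h @ W_VISUAL
    text_wing = h @ W_TEXT
    return h + attn_out + visual_share * visual_wing + text_share * text_wing


hidden = rng.normal(size=(6, D_MODEL))
mask = np.array([True, True, True, False, False, False])
print(wings_layer(hidden, mask).shape)
```

For a purely textual input the visual share is zero, so the textual wing receives the full weight; this is the intuition behind letting the token mix, rather than a fixed switch, decide which learner contributes.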

Low-Rank Residual Attention (LoRRA): Balancing Efficiency and Modality Awareness

The WINGS architecture uses a mechanism called Low-Rank Residual Attention (LoRRA), which keeps computation lightweight while letting the learners capture essential modality-specific information. In the first training stage, only the visual learners are activated, to align image features. In the second stage, the visual and textual learners are co-trained together with a router module that uses attention weights to allocate responsibility between them. Each learner uses efficient attention blocks to interact with either the image or the surrounding text, and its output is combined with that of the main model. This prevents visual attention from overwhelming textual understanding.
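A low-rank residual attention learner might look roughly like the sketch below. The parameterization is an assumption for illustration: queries come from the layer's hidden states, keys and values from the tokens the learner specializes in, every projection passes through a rank `r` much smaller than the hidden size, and the output projection is zero-initialized (a common LoRA-style choice, not confirmed by the article) so the learner initially leaves the pretrained model unchanged.

```python
import numpy as np


def softmax(x: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over the last axis."""
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)


class LowRankResidualAttention:
    """Toy low-rank cross-attention learner (hypothetical parameterization)."""

    def __init__(self, d_model: int, rank: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        scale = 1.0 / np.sqrt(d_model)
        self.rank = rank
        self.w_q = rng.normal(scale=scale, size=(d_model, rank))
        self.w_k = rng.normal(scale=scale, size=(d_model, rank))
        self.w_v = rng.normal(scale=scale, size=(d_model, rank))
        # Zero-initialized output projection: the learner starts by adding nothing.
        self.w_o = np.zeros((rank, d_model))

    def __call__(self, hidden: np.ndarray, context: np.ndarray) -> np.ndarray:
        """hidden: (n, d) queries; context: (m, d) image or text states to attend to."""
        q = hidden @ self.w_q                          # (n, r)
        k = context @ self.w_k                         # (m, r)
        v = context @ self.w_v                         # (m, r)
        attn = softmax(q @ k.T / np.sqrt(self.rank))   # (n, m)
        return (attn @ v) @ self.w_o                   # (n, d) residual update

    def num_params(self) -> int:
        return sum(w.size for w in (self.w_q, self.w_k, self.w_v, self.w_o))


d, r = 64, 8  # hypothetical sizes
learner = LowRankResidualAttention(d_model=d, rank=r)
hidden = np.random.default_rng(1).normal(size=(5, d))
image_feats = np.random.default_rng(2).normal(size=(7, d))
updated = hidden + learner(hidden, image_feats)  # residual combination
print(learner.num_params(), "params vs", 4 * d * d, "for full-rank Q/K/V/O")
```

The learner costs 4·d·r parameters instead of the 4·d² of a full-rank attention block, which is where the "lightweight" claim comes from. Under this sketch, stage one would update only the visual learner's matrices, and stage two would also train the textual learner and the router.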

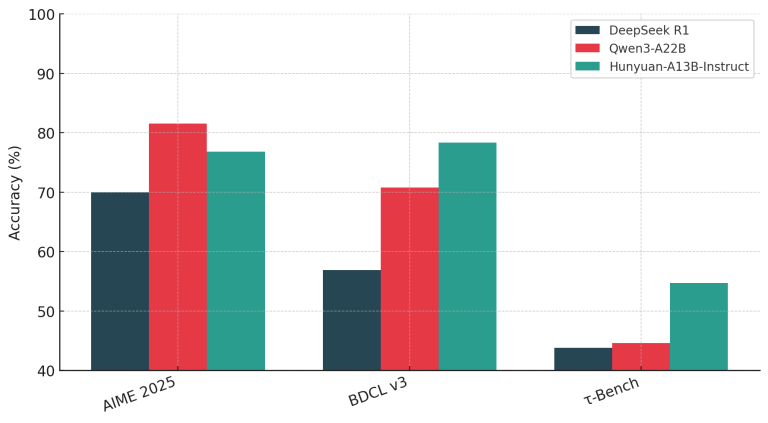

WINGS Performance Benchmarks Across Text and Multimodal Tasks

WINGS showed strong results. On MMLU, it achieved a text-only score of 60.53, an improvement of 9.70 points over a comparable baseline model. On CMMLU, it scored 69.82, 9.36 points higher than the baseline. On reasoning tasks, it gained 11.9 points on RACE-High and 11.12 points on WSC. On multimodal benchmarks such as MMMU-VAL, WINGS improved by 4.78 points. It also performed well on the IIT benchmark, handling mixed text-and-image multi-turn dialogues more effectively than other open-source MLLMs of the same scale.
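For the two benchmarks where both an absolute score and a gain are reported, the baseline score follows by subtraction. The sketch assumes the gains are absolute point differences on the same scale as the scores; the relative-gain figure is derived arithmetic, not a number reported by the authors.

```python
# Reported WINGS text-only scores and gains over the baseline (from the article).
REPORTED = {
    "MMLU": (60.53, 9.70),
    "CMMLU": (69.82, 9.36),
}


def implied_baseline(score: float, gain: float) -> float:
    """Baseline implied by score = baseline + gain, rounded to 2 decimals."""
    return round(score - gain, 2)


def relative_gain(score: float, gain: float) -> float:
    """Gain as a fraction of the implied baseline."""
    return gain / (score - gain)


for bench, (score, gain) in REPORTED.items():
    base = implied_baseline(score, gain)
    rel = relative_gain(score, gain)
    print(f"{bench}: WINGS {score:.2f}, implied baseline {base:.2f}, relative gain {rel:.1%}")
```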

Conclusion: Toward More Balanced and Generalizable MLLMs

In summary, the researchers tackled catastrophic text-only forgetting in MLLMs by introducing WINGS, an architecture that pairs dedicated visual and textual learners with attention-based routing. By analyzing how attention shifts and designing targeted interventions, they preserved text performance while improving visual understanding, yielding a more balanced and efficient multimodal model.

Check out the Paper. All credit for this research goes to the researchers of this project.

Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in Materials Science, he is exploring new advancements and creating opportunities to contribute.