OpenAI Whistleblowers Expose Safety Lapses

OpenAI whistleblowers have raised serious concerns about ignored security incidents and internal practices. A public letter from former employees claims that more than 1,000 internal security issues were never addressed. These allegations are prompting discussions about ethical AI deployment, organizational accountability, and the broader need for enforceable safety standards in the artificial intelligence sector.

Key Takeaways

- Former OpenAI employees allege that more than 1,000 security-related incidents across the organization were neglected.

- According to the letter, warnings about safety risks were repeatedly ignored in pursuit of faster product development.

- Concerns are growing about OpenAI’s commitment to responsible innovation, especially when compared with other AI firms.

- Industry voices are urging government bodies to increase regulatory oversight of advanced AI technologies.

Inside the Whistleblower Letter: Key Claims & Sources

The letter was signed by nine former OpenAI employees, including people who worked in governance, safety, and policy roles. Their message conveyed frustration with the organization’s internal culture, which they described as secretive and dismissive of safety obligations. The signatories claim that senior leadership failed to act on specific issues that could have affected public safety.

Daniel Kokotajlo, formerly part of the governance team, stated that he resigned after losing confidence in OpenAI’s ability to oversee its own development responsibly. The letter argues that restrictive non-disclosure agreements prevented employees from voicing concerns internally or externally. The authors called for current and former staff to be released from these legal restrictions and for independent audits to verify the organization’s safety infrastructure.

The Alleged Security Breaches: Data & Context

While the document does not detail each of the alleged 1,000 incidents, it outlines several categories of concern. These include:

- Exposure of sensitive model architectures and confidential training data to unauthorized parties.

- Insufficient monitoring and assessment of potential misuse cases, such as those involving bioweapons research.

- Weak enforcement of the red-teaming protocols designed to identify unsafe behaviors in models such as GPT-4 and OpenAI’s Sora.

These claims have alarmed experts who believe AI labs should follow strict protocols to ensure that advanced systems operate within defined safety limits. If true, these issues could pose significant risks and would point to a failure to uphold OpenAI’s original mission of developing AGI for the benefit of society.

OpenAI’s Response: Official Statements & Background

In response to the whistleblower letter, OpenAI released a statement reaffirming its commitment to ethics and responsible AI development. The company acknowledged that absolute safety is unrealistic but emphasized that internal governance structures are in place. These include a Safety Advisory Group that reports its findings directly to the board.

OpenAI says it encourages debate within its teams and conducts regular risk assessments. Critics, however, argue that these mechanisms lack independence and transparency. This view builds on a broader critique of OpenAI’s shift from a nonprofit to a profit-driven structure, which some believe compromised its founding values.

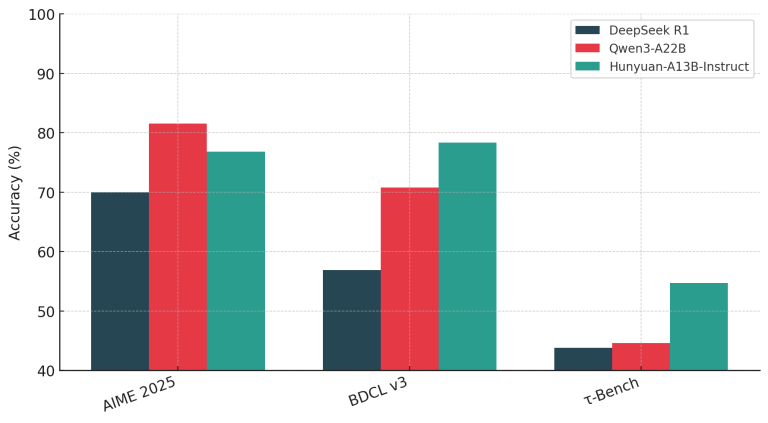

How OpenAI Compares with DeepMind and Anthropic

| AI Lab | Safety Mechanisms | Public Accountability | Known Safety Lapses |
|---|---|---|---|
| OpenAI | Internal governance, risk review, red teaming | Selective transparency | More than 1,000 alleged incidents reported by whistleblowers |
| Google DeepMind | Ethics units, external review boards | Regular safety-related communications | No major reports |
| Anthropic | Constitutional AI, dedicated safety team | Detailed safety publications and roadmap | Unconfirmed |

This comparison suggests that OpenAI currently stands out for negative reasons. While its peers publish frequent updates and commission third-party evaluations, OpenAI’s practices appear more insular. Concerns have escalated since 2023, when the company began limiting transparency about the safety performance of its large-scale models.

Regulatory Repercussions: What’s Next?

Governments and oversight bodies are now reassessing how to regulate frontier AI systems. Whistleblower reports like this one are accelerating policy momentum toward enforceable safety standards.

Current Regulatory Actions:

- European Union: The EU AI Act subjects foundation models to stringent requirements, including incident disclosure and regular audits.

- United States: NIST has published an AI Risk Management Framework, and the federal government has formed the U.S. AI Safety Institute.

- United Kingdom: The UK is encouraging cooperation through industry-led safety guidelines following its recent AI Safety Summit.

Policymakers are drawing lessons from these ongoing developments and are likely to require more frequent and rigorous oversight, including whistleblower protections and external verification of safety claims.

Expert Insight: Industry Opinions on AI Safety Culture

Dr. Rama Sreenivasan, a researcher affiliated with Oxford’s Future of Humanity Institute, emphasized that centralized development models cannot govern themselves effectively while pursuing commercial gains. He urged the creation of external channels for enforcing safety.

Supporting that view, former FTC advisor Emeka Okafor noted that the disclosures could shape future legislation that includes enforceable rights for whistleblowers and transparency requirements for model behavior. This comes as public attention increasingly focuses on reports that an OpenAI model exhibits self-preservation tactics, which raises long-term policy and ethical questions.

A poll conducted by Morning Consult in May 2024 found that more than half of U.S. adults trust OpenAI less than they did six months earlier. Nearly 70 percent support creating an independent AI safety board with the authority to audit and regulate high-risk systems.

Conclusion: What This Tells Us About AI Safety Culture

OpenAI continues to lead in AI capabilities, but the issues raised by the whistleblowers point to deep structural problems in how the company handles safety. While other organizations maintain visible safety structures, OpenAI’s practices appear opaque and risk-tolerant. These revelations are consistent with earlier investigations, such as the one that uncovered unexpected flaws in OpenAI’s Sora video model.

The next phase will likely determine whether the company can restore trust through reform and transparency or whether external regulators must step in to enforce compliance. The growing spotlight on OpenAI’s internal dynamics and safety culture suggests that both industry and government actors are preparing for a more assertive regulatory stance.

FAQ: Understanding the Whistleblower Allegations

What did the OpenAI whistleblowers allege?

They stated that OpenAI failed to address more than 1,000 known internal security issues and prevented employees from speaking out by enforcing strict non-disclosure agreements.

Has OpenAI responded to the whistleblower claims?

Yes. The company said that it remains committed to AI safety and that its internal governance structures already address risk appropriately.

How does OpenAI handle AI safety today?

It relies on teams dedicated to internal risk assessments and selective red-teaming. Critics argue that more independent evaluations are needed.

What regulatory actions are being taken regarding AI companies?

Global efforts are underway. The EU AI Act and the U.S. AI Safety Institute are two prime examples of initiatives advancing the standardization and oversight of AI systems.
