Apple AI Transforms Navigation for Blind

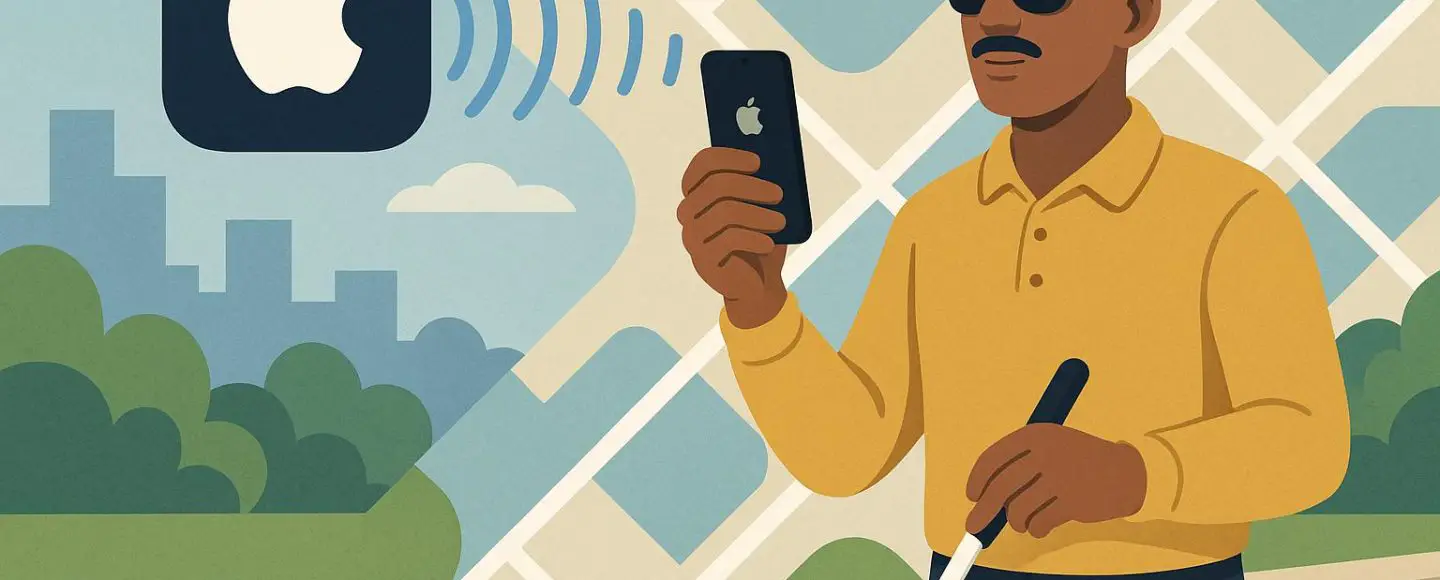

Apple’s AI-powered navigation for blind users is a powerful testament to how cutting-edge technology can redefine independence for visually impaired people. Building on artificial intelligence, Apple has developed a spatial navigation system that addresses many of the everyday challenges faced by blind and low-vision pedestrians. By integrating visual imagery, GPS data, and secure anonymized datasets, Apple’s model delivers richly contextual street-level information about intersections, sidewalks, signage, and traffic signals. This advance not only demonstrates technical excellence but also marks a transformative step in accessibility innovation.

Key Takeaways

- Apple’s AI-based system enhances urban navigation for blind and visually impaired users by combining GPS data with street-level imagery.

- The technology significantly outperforms earlier models in spatial awareness and multimodal navigation tasks.

- Data privacy is a top priority in Apple’s AI development, reinforced by rigorous anonymization protocols and ethical compliance.

- This initiative aligns with Apple’s broader accessibility agenda and signals a shift in the future of assistive AI design.

Understanding Apple’s AI Navigation Breakthrough

Apple’s new AI model represents a major leap forward in accessible navigation. The system is designed to help blind and visually impaired users recognize key street features, such as traffic lights, sidewalks, road crossings, and nearby landmarks, through multimodal inputs like satellite imagery, street-level photographs, and GPS data. From this combined data, the AI builds a context-aware model of the user’s environment that can deliver real-time, accessible directions.

This technology departs from traditional turn-by-turn guidance systems. It describes the surroundings with spatial awareness and urban semantic detail, which is essential for non-visual travelers. The goal is not only to help users get from point A to point B but to give them a comprehensive understanding of the path ahead.

How Apple’s AI Navigation for the Blind Works

At its core, Apple’s AI navigation for blind users fuses visual recognition with geolocation. The system is trained on large datasets of anonymized imagery from urban areas. By applying machine learning techniques to cityscapes, the AI can detect key features such as curb cuts, stairs, pedestrian signals, stop signs, and building boundaries.
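The article does not publish Apple’s model or code. As a minimal sketch of what fusing visual recognition with geolocation can involve, assume a hypothetical image model that reports each detected feature’s label, its bearing relative to the camera’s forward axis, and an estimated distance. Combined with the phone’s GPS fix and compass heading, that detection can be pinned to map coordinates using the standard great-circle destination-point formula. The names `Detection` and `geotag_detection` are illustrative assumptions, not Apple APIs.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical approximation)


@dataclass
class Detection:
    """Hypothetical output of an image model: one labelled street feature."""
    label: str                   # e.g. "curb_cut", "pedestrian_signal"
    relative_bearing_deg: float  # angle from the camera's forward axis, clockwise positive
    distance_m: float            # estimated range from the camera to the feature


def geotag_detection(lat_deg: float, lon_deg: float, heading_deg: float,
                     det: Detection) -> tuple[str, float, float]:
    """Project a camera detection onto the map from a GPS fix and compass heading.

    Applies the great-circle destination-point formula on a spherical Earth.
    Returns (label, latitude, longitude) in degrees.
    """
    # Absolute bearing = device heading + where the feature sits in the frame.
    bearing = math.radians((heading_deg + det.relative_bearing_deg) % 360)
    delta = det.distance_m / EARTH_RADIUS_M  # angular distance in radians
    lat1, lon1 = math.radians(lat_deg), math.radians(lon_deg)

    lat2 = math.asin(math.sin(lat1) * math.cos(delta)
                     + math.cos(lat1) * math.sin(delta) * math.cos(bearing))
    lon2 = lon1 + math.atan2(math.sin(bearing) * math.sin(delta) * math.cos(lat1),
                             math.cos(delta) - math.sin(lat1) * math.sin(lat2))

    # Normalize longitude into [-180, 180).
    lon2_deg = (math.degrees(lon2) + 540) % 360 - 180
    return det.label, math.degrees(lat2), lon2_deg


if __name__ == "__main__":
    # User facing east; a curb cut is seen 10 m away, 15 degrees to the right.
    print(geotag_detection(40.0, -75.0, 90.0, Detection("curb_cut", 15.0, 10.0)))
```

A spherical Earth is accurate enough at the tens-of-metres ranges a phone camera covers; in practice, GPS and distance-estimation error would dominate the result.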

The AI processes this information to build a semantic map that supplements GPS with essential accessibility details. For example, it can distinguish between a crosswalk with audible signals and one without. This produces real-time insights that guide users safely and efficiently. The system may be integrated into wearable devices or delivered through the iOS ecosystem, expanding access across Apple platforms. For a deeper look at how Apple leverages AI in personal devices, explore how Siri is enhanced by artificial intelligence.
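To make the idea of a semantic map layered over GPS more concrete, here is a minimal sketch, not Apple’s implementation: street features (such as crosswalks tagged with whether they have an audible signal) are stored with coordinates, and a radius query around the user’s position turns the nearest ones into short, screen-reader-friendly messages. The `StreetFeature` type, the `audible_signal` attribute, and the 50 m default radius are hypothetical choices for illustration.

```python
import math
from dataclasses import dataclass, field

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical approximation)


@dataclass
class StreetFeature:
    """Hypothetical semantic-map entry: a street feature pinned to a coordinate."""
    kind: str  # e.g. "crosswalk", "curb_cut", "stairs"
    lat: float
    lon: float
    attributes: dict = field(default_factory=dict)  # e.g. {"audible_signal": True}


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two points given in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(a)))


def nearby(features, lat, lon, radius_m, kind=None):
    """Return (distance, feature) pairs within radius_m, nearest first."""
    hits = []
    for f in features:
        if kind is not None and f.kind != kind:
            continue
        d = haversine_m(lat, lon, f.lat, f.lon)
        if d <= radius_m:
            hits.append((d, f))
    hits.sort(key=lambda pair: pair[0])  # sort on distance only
    return hits


def describe_crossings(features, lat, lon, radius_m=50.0):
    """Turn nearby crosswalks into short spoken-style messages."""
    messages = []
    for d, f in nearby(features, lat, lon, radius_m, kind="crosswalk"):
        audio = ("with audible signal" if f.attributes.get("audible_signal")
                 else "without audible signal")
        messages.append(f"Crosswalk {audio}, about {round(d)} m away")
    return messages


if __name__ == "__main__":
    semantic_map = [
        StreetFeature("crosswalk", 0.0, 0.0002, {"audible_signal": True}),
        StreetFeature("crosswalk", 0.0, 0.0003, {"audible_signal": False}),
        StreetFeature("curb_cut", 0.0, 0.0001),
    ]
    for line in describe_crossings(semantic_map, 0.0, 0.0):
        print(line)
```

A linear scan is fine for a handful of features; a city-scale map would more likely sit behind a spatial index such as a geohash grid or an R-tree.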

Comparative Accessibility: Apple vs. Microsoft vs. Google

| Feature | Apple AI Navigation | Microsoft Seeing AI | Google Lookout |
|---|---|---|---|
| Core Functionality | Street-level navigation with multimodal spatial awareness | Object and scene description via camera | Item recognition, currency detection, text reading |
| AI Architecture | Visual-GPS semantic mapping | Object recognition and OCR | Image-to-text analysis with contextual cues |
| Navigation Support | Yes, with live street feature detection | No real-time navigation | Limited to object location |
| Integration | iOS + location-based services | Available on iOS and Android | Android only |

Real-World Use Scenarios and User Implications

Field testing of Apple’s AI model suggests immediate value for visually impaired users in urban centers. Potential scenarios include:

- Navigating complex intersections with inconsistent audible traffic signals

- Adjusting walking routes around construction or detours

- Identifying entrances to public buildings or businesses based on proximity

- Safely moving through high-density areas such as transit stations or marketplaces

These advances can complement existing solutions. One innovative example is AI-powered smart glasses for the visually impaired, which offer hands-free assistance as users move through unfamiliar environments.

Beyond individual impact, the system creates opportunities for city-wide inclusivity. It could inform urban planning decisions with data-driven insights about pedestrian traffic and risk zones affecting the blind community.

Ethical Design and Data Privacy in Development

One hallmark of Apple’s engineering philosophy is its emphasis on privacy. True to form, the navigation AI follows strict guidelines for anonymization, usage limits, and ethical training protocols. All datasets are stripped of identifying markers. User interaction data stays on the device or in encrypted storage. No images are linked to individuals, specific addresses, or behavioral patterns.

This approach supports compliance with global AI ethics initiatives, including the EU’s AI Act and NIST’s AI Risk Management Framework. To learn more about how Apple is advancing responsible AI, consider reading about Apple’s ethical AI framework.

The State of Accessible Navigation: 2023–2024 Statistics

- The World Health Organization reports that over 253 million people worldwide have moderate to severe vision impairment.

- In the United States, an estimated 7.6 million adults have visual disabilities that affect mobility (National Federation of the Blind).

- More than 48 percent of blind adults regularly use assistive technology. However, many areas lack adequate spatial data for safe navigation.

- Urban pedestrian accident rates remain significantly higher for blind individuals in cities without tailored accessibility infrastructure.

These data points highlight the importance of scalable tools like Apple’s navigation system, which must be designed with privacy and inclusivity as core principles.

“This kind of AI-powered spatial awareness could lead to universal design principles being embedded in both hardware and public infrastructure,” says Lina Rodriguez, a UX researcher focused on inclusive technology. “The beauty of Apple’s approach is that it doesn’t require users to learn entirely new systems. It can plug into the tools they’re already comfortable with.”

Voice assistants and environment-aware devices are likely to keep evolving. Future versions could integrate with smart city infrastructure or adaptive mobility solutions, making the vision of a blind-accessible world more attainable. In related work, companies have even experimented with a robot designed to navigate without sight, showing how machines can be trained for the mobility challenges blind users face.

Frequently Asked Questions

How is Apple using AI to help the blind?

Apple’s AI navigation system uses GPS and street-level imagery to understand and relay important real-world environmental cues. It gives visually impaired users detailed interpretations of intersections, traffic lights, sidewalks, and more, which helps promote safer and more independent travel.

What accessibility tools does Apple offer?

Apple offers many accessibility features, including the VoiceOver screen reader, haptic feedback, screen magnification, and real-time audio descriptions. The new AI navigation model complements this ecosystem by offering real-world spatial guidance for blind users.

How do blind people currently navigate the streets?

Blind individuals typically use a combination of white canes, guide dogs, and orientation and mobility training. Tools like VoiceOver and Aira support navigation but often lack reliable real-time environmental awareness.

What are the challenges in pedestrian navigation for the visually impaired?

Barriers include unpredictable traffic signals, minimal tactile sidewalk guidance, sudden construction with no alternative routes, and difficulty judging traffic by sound alone. These risks limit full autonomy without advanced aids.

Conclusion

Apple’s innovation in AI-driven, street-level navigation represents a major turning point in inclusive mobility. By simplifying the way blind individuals interact with their environment, the technology offers more than convenience: it provides dignity and self-sufficiency. As Apple continues refining its AI technologies, new possibilities are emerging for equitable urban access. For a broader overview of how Apple is positioning itself in artificial intelligence, see its insights on machine learning, accessibility, and on-device intelligence at apple.com/analysis.

These developments highlight Apple’s commitment to integrating AI in ways that prioritize user privacy, contextual relevance, and universal design. The future of urban mobility will depend not just on smarter cities, but on smarter, more empathetic technology.