DeepSeek’s recent update on its DeepSeek-V3/R1 inference system is generating buzz, but for those who value real transparency, the announcement leaves much to be desired. While the company showcases impressive technical achievements, a closer look reveals selective disclosure and crucial omissions that call into question its commitment to true open-source transparency.

Impressive Metrics, Incomplete Disclosure

The release highlights engineering feats such as advanced cross-node Expert Parallelism, overlapping communication with computation, and production stats that claim remarkable throughput: for example, serving billions of tokens in a day, with each H800 GPU node handling up to 73.7k tokens per second. These numbers sound impressive and suggest a high-performance system built with meticulous attention to efficiency. However, such claims are presented without a full, reproducible blueprint of the system. The company has made parts of the code available, such as custom FP8 matrix libraries and communication primitives, but key components, like the bespoke load balancing algorithms and disaggregated memory systems, remain partially opaque. This piecemeal disclosure leaves independent verification out of reach, ultimately undermining confidence in the claims made.
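To put the quoted per-node figure in perspective, here is a minimal back-of-envelope sketch. It assumes the "up to 73.7k tokens per second" rate could be sustained for a full 24 hours, which is an upper bound rather than a claim about actual production load. The function name and the `utilization` parameter are hypothetical, introduced only for illustration.

```python
# Back-of-envelope ceiling on daily tokens for one node.
# Only the 73.7k tokens/s peak comes from the announcement; the rest
# is an illustrative assumption, not DeepSeek's own accounting.
PEAK_TOKENS_PER_SECOND = 73_700
SECONDS_PER_DAY = 24 * 60 * 60


def daily_token_ceiling(tokens_per_second: int, utilization: float = 1.0) -> float:
    """Tokens processed in one day at a given rate.

    `utilization` (hypothetical) is the fraction of the day the node
    runs at that rate; 1.0 means the peak is held for all 24 hours.
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return tokens_per_second * SECONDS_PER_DAY * utilization


if __name__ == "__main__":
    ceiling = daily_token_ceiling(PEAK_TOKENS_PER_SECOND)
    print(f"Per-node ceiling: {ceiling / 1e9:.2f} billion tokens/day")
```

At the quoted peak, a single node tops out at roughly 6.37 billion tokens per day, so "billions of tokens in a day" is arithmetically plausible. What the arithmetic cannot show is how close real traffic comes to that peak, which is exactly the kind of detail a reproducible blueprint would supply.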

The Open-Source Paradox

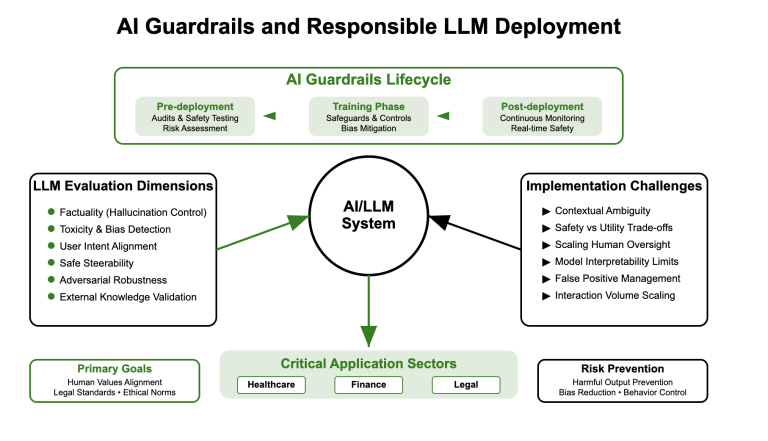

DeepSeek proudly brands itself as an open-source pioneer, yet its practices paint a different picture. While the infrastructure and some model weights are shared under permissive licenses, there is a glaring absence of comprehensive documentation regarding the data and training procedures behind the model. Crucial details, such as the datasets used, the filtering processes applied, and the steps taken for bias mitigation, are notably missing. In a community that increasingly values full disclosure as a way to assess both technical merit and ethical considerations, this omission is particularly problematic. Without clear data provenance, users cannot fully evaluate the potential biases or limitations inherent in the system.

Moreover, the licensing strategy deepens the skepticism. Despite the open-source claims, the model itself is encumbered by a custom license with unusual restrictions, limiting its commercial use. This selective openness, sharing the less critical parts while withholding core components, echoes a trend known as “open-washing,” where the appearance of transparency is prioritized over substantive openness.

Falling Short of Industry Standards

In an era where transparency is emerging as a cornerstone of trustworthy AI research, DeepSeek’s approach appears to mirror the practices of industry giants more than the ideals of the open-source community. While companies like Meta with LLaMA 2 have also faced criticism for limited data transparency, they at least provide comprehensive model cards and detailed documentation on ethical guardrails. DeepSeek, in contrast, opts to highlight performance metrics and technological innovations while sidestepping equally important discussions about data integrity and ethical safeguards.

This selective sharing of information not only leaves key questions unanswered but also weakens the overall narrative of open innovation. Genuine transparency means not only unveiling the impressive parts of your technology but also engaging in an honest dialogue about its limitations and the challenges that remain. In this regard, DeepSeek’s latest release falls short.

A Call for Genuine Transparency

For enthusiasts and skeptics alike, the promise of open-source innovation should be accompanied by full accountability. DeepSeek’s recent update, while technically intriguing, appears to prioritize a polished presentation of engineering prowess over the deeper, more challenging work of genuine openness. Transparency isn’t merely a checklist item; it’s the foundation for trust and collaborative progress in the AI community.

A truly open project would include a complete set of documentation, from the intricacies of system design to the ethical considerations behind training data. It would invite independent scrutiny and foster an environment where both achievements and shortcomings are laid bare. Until DeepSeek takes these additional steps, its claims to open-source leadership remain, at best, only partially substantiated.

In sum, while DeepSeek’s new inference system may well represent a technical leap forward, its approach to transparency suggests a cautionary tale: impressive numbers and cutting-edge techniques don’t automatically equate to genuine openness. For now, the company’s selective disclosure serves as a reminder that in the world of AI, true transparency is as much about what you leave out as it is about what you share.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.